Yuejie Chi

ECE 18-813B: Special Topics in Artificial Intelligence: Foundations of Reinforcement Learning

This course aims to present a coherent framework that covers important algorithmic developments in modern RL, highlighting the connections between new ideas and classical topics. Employing Markov Decision Processes (MDPs) as the central mathematical framework, we will cover multiple important scenarios including but not limited to the simulator setting, online RL, offline RL, and multi-agent RL, gravitating our discussions around issues such as sample complexity, computational efficiency, function approximation, distributional robustness, as well as information-theoretic and algorithmic-dependent lower bounds.

Course Syllabus

Recommended Readings

-

Reinforcement Learning: Theory and Algorithms, by Agarwal, Jiang, Kakade, and Sun

-

Reinforcement learning: An introduction, by Sutton and Barto

-

Bandit Algorithms, by Lattimore and Szepesvari

Reinforcement Learning, by Silver

-

Algorithms for Reinforcement Learning, by Szepesvari

Lecture Notes

Note: some figures in the slides are taken from the internet or public resources, without proper acknowledgement. I apologize for these omissions in advance, which will be fixed at a later time.

-

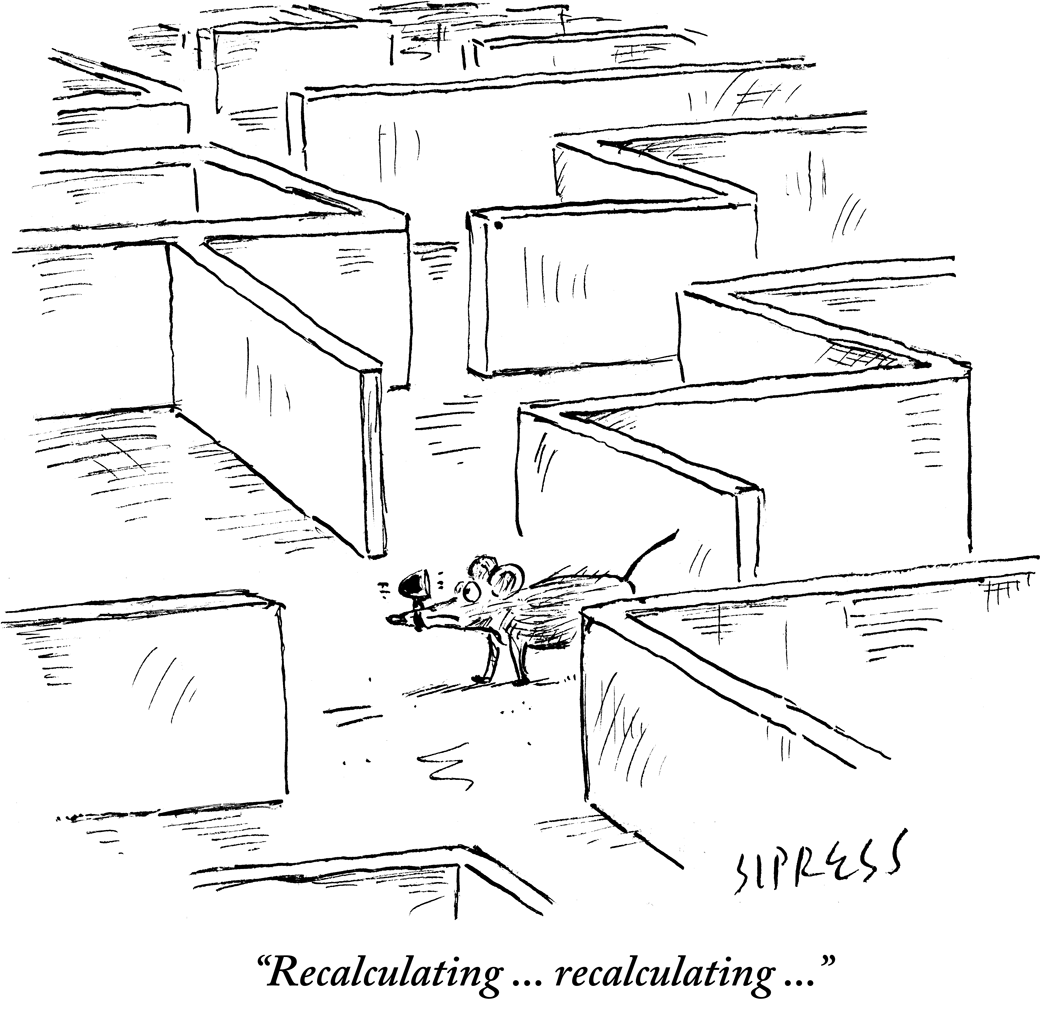

Lecture 1: Introduction

Readings: [Sutton and Barto, Chapter 1] -

Lecture 2: Multi-arm bandits: stochastic bandits

Readings: [Lattimore and Szepesvari, Chapters 4 and 7] -

Lecture 3: Multi-arm bandits: adversarial bandits

Readings: [Lattimore and Szepesvari, Chapter 11] -

Lecture 4: Multi-arm bandits: lower bounds

Readings: [Lattimore and Szepesvari, Chapters 13-16] -

Lecture 5: Markov decision processes: basics

Readings: [AJKS, Chapter 1] [Silver's Lectures 1 and 2] -

Lecture 6: Markov decision processes: dynamic programming

Readings: [AJKS, Chapter 1] [Silver's Lecture 3] -

Lecture 7: Model-based RL with simulators

Readings: [AJKS, Chapter 2] [Li et al, 2020] -

Lecture 8: Model-free RL: Monte Carlo and TD learning

Readings: [Silver's Lecture 4] -

Lecture 9: Model-free RL: Q-learning

Readings: [Sample complexity of Q-learning] -

Lecture 10: Online RL: Monte Carlo, Sarsa and Q-learning with GLIE

Readings: [Sutton and Barto, Chapter 6] [Silver's Lecture 5] -

Lecture 11: Online RL: regret analysis and algorithms

Readings: [Lower bound] [UCB-VI] [UCB-Q] -

Lecture 12: Policy optimization: REINFORCE, PG and NPG

Readings: [Policy gradient methods] [AJKS, Chapters 11-12] -

Lecture 13: Policy optimization: the role of regularization

Readings: [Entropy-regularized NPG] [PMD] [GPMD] -

Lecture 14: Imitation learning

Readings: [AJKS, Chapters 15] [Ross and Bagnell, 2010] [Ross and Bagnell, 2011] -

Lecture 15: Offline RL: pessimism

Readings: [Rashidinejad et al, 2021] [Shi et al, 2022] [Li et al, 2022] -

Lecture 16: Linear function approximation

Readings: [Sutton and Barto, Chapter 9] [Tsitsiklis and Van Roy, 1997] [Bhandari et al, 2018] -

Lecture 17: The deadly triad and actor-critic

Readings: [Sutton and Barto, Chapter 11] [AJKS, Chapter 13] [Silver's Lecture 13] -

Lecture 18: linear MDP

Readings: [AJKS, Chapter 8] [Planning under local access] -

Lecture 19: MARL: statistical perspective

Readings: [V-learning] [Minimax-optimal] -

Lecture 20: MARL: optimization perspective

Readings: [Matrix game] [Markov game]

Suggested Readings for Presentations/Projects (incomplete)

You can also select another paper upon the instructor's approval.

Constrained MDP: CRPO, sample complexity, multi-objective RL

-

Robust MDP: sample complexity, policy optimization, corruption-robust RL

Risk-sensitive RL: regret

Convex MDP: reward is enough

Multi-agent RL: V-learning, CCE learning

Representation learning: decision-estimation coefficient, contrastive representation learning, low-rank MDP

policy gradient methods: general theory, PG for control, variance-reduced PG

Offline RL: doubly-robust OPE, pessimism, offline RL with realizability, actor-critic, adversarial model

Additional topics: RLHF, the role of coverage, POMDP