Behavior-Aware Computing

The commoditization of sensor technologies, coupled with advances in modeling user behavior, offers new opportunities for simplifying and strengthening authentication. We envision a new mobile system framework, SenSec, which uses passive sensory data to ensure application security. SenSec constantly collects sensory data from the accelerometer, gyroscope, GPS, WiFi, microphone, and even the camera. By analyzing this data, it constructs the context in which the mobile device is being used, including locations, movements, and usage patterns. From the context, the system calculates the certainty that it is at risk. It then compares this certainty with the sensitivity levels of the different mobile applications. For applications whose sensitivity passes the certainty threshold, an authentication mechanism is invoked before the application launches, enforcing the security policy for that application. Read more from my publications
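The comparison between risk certainty and application sensitivity can be illustrated with a minimal sketch. It is not the SenSec implementation: the `App` type, the 0.0 to 1.0 scales, and the rule "challenge the user when the risk certainty exceeds `1 - sensitivity`" are all assumptions chosen only to make the idea concrete.

```python
from dataclasses import dataclass


@dataclass
class App:
    """Hypothetical application record (not part of SenSec itself)."""
    name: str
    sensitivity: float  # assumed scale: 0.0 (harmless) to 1.0 (highly sensitive)


def requires_authentication(risk_certainty: float, app: App) -> bool:
    """Decide whether to challenge the user before launching `app`.

    Assumption: an app tolerates a risk certainty of at most
    (1 - sensitivity), so more sensitive apps trigger authentication
    at lower levels of estimated risk.
    """
    tolerated_risk = 1.0 - app.sensitivity
    return risk_certainty > tolerated_risk


if __name__ == "__main__":
    risk = 0.3  # assumed output of the context model
    for app in (App("banking", 0.9), App("weather", 0.1)):
        print(app.name, requires_authentication(risk, app))
```

Under these assumptions, the same estimated risk leads to a login prompt for a highly sensitive application while a low-sensitivity application opens without interruption.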

Social Media Analysis and its applications

Twitter has become an increasingly important source of information during disasters. Authorities have responded by providing related information on Twitter. The same channel can also deliver disaster preparation information to increase the readiness of the general public. Retweeting is the key mechanism that drives this information diffusion. Understanding the factors that affect Twitter users' retweet decisions would help authorities adopt an optimal strategy for choosing content, style, keywords, initial target users, timing, and frequency. This helps optimize the communication of disaster messages given the unique characteristics of the Twitter medium and, as a result, speeds up information propagation to save more lives. We present an analysis of users' retweeting behavior, studying the factors that may affect this decision, including context influences, network influences, and time-decay factors. We aim to build a fine-grained predictive model for retweeting: given a tweet, we would like to predict the retweeting decision of each user within a targeted network. Read more from my publications or [1] [2]
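To make the three factor families concrete, here is a toy per-user scoring function. It is only a sketch and not the model from the publications: the logistic form, the exponential half-life decay, and every parameter name and default value are assumptions made for illustration.

```python
import math


def retweet_probability(
    context_score: float,
    network_score: float,
    hours_since_posted: float,
    context_weight: float = 1.0,   # assumed weight, would be learned from data
    network_weight: float = 1.0,   # assumed weight, would be learned from data
    bias: float = -2.0,            # assumed baseline reluctance to retweet
    half_life_hours: float = 6.0,  # assumed time-decay half-life
) -> float:
    """Toy estimate of the chance that one user retweets one tweet.

    Context and network influences are combined with a logistic function,
    and the result is scaled by an exponential time-decay factor.
    """
    z = bias + context_weight * context_score + network_weight * network_score
    base = 1.0 / (1.0 + math.exp(-z))
    decay = 0.5 ** (hours_since_posted / half_life_hours)
    return base * decay


if __name__ == "__main__":
    fresh = retweet_probability(1.5, 2.0, hours_since_posted=0.0)
    stale = retweet_probability(1.5, 2.0, hours_since_posted=12.0)
    print(f"fresh tweet: {fresh:.3f}, 12 hours later: {stale:.3f}")
```

Applying such a function to each user in a targeted network yields the per-user predictions described above; a real model would fit the weights and the decay rate to observed retweet data.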

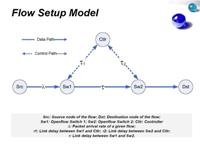

An Analytical Study: Deploying OpenFlow in Large Networks

The OpenFlow operation model usually implies that flow decisions are made remotely on an OpenFlow controller. This model introduces delay in the flow decision at the ingress switch, which in turn implies a certain buffering requirement. To successfully deploy OpenFlow in service provider and large enterprise networks, we argue that we need to fully understand these problems and develop a systematic way to decide whether it is feasible to deploy OpenFlow in a given network environment with given QoS requirements. In this paper, we construct a Flow Setup Model to model and analyze these problems. Sensitivity analysis, simulation results, and testing results on real systems show that OpenFlow can be deployed in large-scale networks, provided the controller's location is chosen carefully based on the traffic patterns and network topology. This study provides insights for efficiently comparing deployment options and optimizing the deployment plan under budget and infrastructure constraints.
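The link between controller placement, setup delay, and buffering can be shown with a back-of-the-envelope calculation. This is a simplified sketch, not the Flow Setup Model from the paper: it assumes that setup delay is one round trip to the controller plus a fixed processing time, and that the ingress switch must buffer traffic arriving at a given rate for that whole period. All function and parameter names are hypothetical.

```python
def flow_setup_delay(one_way_delay_s: float, controller_processing_s: float) -> float:
    """Assumed setup delay: switch -> controller -> switch, plus processing."""
    return 2.0 * one_way_delay_s + controller_processing_s


def buffer_bytes_needed(arrival_rate_bps: float, setup_delay_s: float) -> float:
    """Crude bound on bytes held at the ingress switch while flows wait.

    Assumes traffic for undecided flows arrives at `arrival_rate_bps`
    (bits per second) for the entire setup delay.
    """
    return arrival_rate_bps / 8.0 * setup_delay_s


if __name__ == "__main__":
    # Illustrative numbers only: 5 ms one-way delay, 1 ms processing, 1 Gb/s.
    delay = flow_setup_delay(one_way_delay_s=0.005, controller_processing_s=0.001)
    print(f"setup delay: {delay * 1e3:.1f} ms")
    print(f"buffer bound: {buffer_bytes_needed(1e9, delay) / 1e6:.3f} MB")
```

Even this crude estimate shows why controller location matters: in this simplification, doubling the one-way delay to the controller nearly doubles the buffering needed at the ingress switch.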

POMI Directory Service Design: A White Paper

The POMI initiative calls for a scalable directory service (DS) architecture. The DS would be used to find entities, including subscribers, devices, and services, on the Internet and connect to them, much as DNS is used today. Unlike DNS, it would also allow rapid mobility of entities across multiple points of attachment in the network while maintaining connectivity with certain QoS guarantees, such as higher bandwidth and minimal delay and packet loss.

This paper first motivates a fresh design of the DS for POMI by illustrating the key problems with the legacy DS. It then explores the DS requirements under certain use-case assumptions. Based on these requirements, it evaluates the feasibility of several proposed DS architectures and their implications for networking, storage, and computing. Finally, the paper opens a discussion of the remaining DS design issues for POMI and motivates further implementation and benchmarking of the various ideas.

Making Large-scale Deployment of RCP Practical for Real Networks

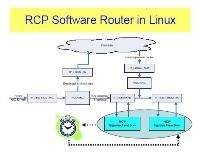

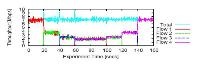

We recently proposed the Rate Control Protocol (RCP) as a way to minimize download times (or flow-completion times). Simulations suggest that if RCP were widely deployed, downloads would frequently finish an order of magnitude faster than with TCP. This is because RCP involves explicit feedback from the routers along the path, allowing a sender to pick a fast starting rate, and adapt quickly to network conditions. RCP is particularly appealing because it can be shown to be stable under broad operating conditions, and its performance is independent of the flow-size distribution and the RTT. Although it requires changes to the routers, the changes are small: The routers keep no per-flow state or per-flow queues, and the per-packet processing is minimal.
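The kind of per-router computation involved can be sketched as follows. The update rule is written from the form commonly given in published descriptions of RCP and is not taken from this page, so treat it as an assumption; the gains `alpha` and `beta`, the units, and the clamping bounds are illustrative choices. The point of the sketch is that the router keeps one rate for all flows and updates it from aggregate measurements only.

```python
def rcp_rate_update(
    prev_rate: float,      # rate offered to every flow in the last interval (bytes/s)
    capacity: float,       # link capacity C (bytes/s)
    input_rate: float,     # measured aggregate arrival rate y (bytes/s)
    queue_bytes: float,    # instantaneous queue size q (bytes)
    avg_rtt_s: float,      # running average RTT d0 of traffic on the link (s)
    interval_s: float,     # update interval T (s)
    alpha: float = 0.5,    # assumed gain on spare capacity
    beta: float = 0.25,    # assumed gain on queue drain
    min_rate: float = 1.0, # assumed floor to keep the rate positive
) -> float:
    """One update of the single per-link rate, with no per-flow state.

    Assumed rule: R <- R * (1 + (T/d0) * (alpha*(C - y) - beta*q/d0) / C),
    clamped to the range [min_rate, capacity].
    """
    feedback = alpha * (capacity - input_rate) - beta * queue_bytes / avg_rtt_s
    new_rate = prev_rate * (1.0 + (interval_s / avg_rtt_s) * feedback / capacity)
    return max(min_rate, min(capacity, new_rate))


if __name__ == "__main__":
    # Underloaded link with an empty queue: the offered rate rises.
    print(rcp_rate_update(50.0, 100.0, 60.0, 0.0, avg_rtt_s=0.1, interval_s=0.01))
    # Overloaded link with a standing queue: the offered rate falls.
    print(rcp_rate_update(50.0, 100.0, 120.0, 2.0, avg_rtt_s=0.1, interval_s=0.01))
```

Because every flow on the link is handed the same rate, a new sender can start at that rate immediately instead of probing for it, which is where the shorter flow-completion times come from.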

However, the bar is high for a new congestion control mechanism -- introducing a new scheme requires enormous change, and the argument needs to be compelling. And so, to enable incremental deployment of RCP, we have built and tested an open and public implementation of RCP, and proposed solutions for deployments that require no fork-lift network upgrades.

In this paper (in PDF) we describe our end-host and router implementation of RCP in Linux, along with solutions that let RCP coexist in a network carrying predominantly non-RCP traffic and coordinate with routers that don't implement RCP. We hope that these solutions will take us closer to having an impact in real networks, not just for RCP but also for the many other explicit congestion control protocols proposed in the literature.