ARENA Digital Twin Platform

The ARENA (Augmented Reality Edge Network Architecture) is a dynamic, web-based platform designed for multi-user and multi-agent extended reality (XR) environments. It simplifies the process of developing and hosting applications that engage users and agents within a 3D immersive environment accessible through browsers. ARENA offers functionalities like content discovery based on location, networked entities for content manipulation, and input/output for users and devices with built-in access control. It is an easy way to execute networked and sandboxed applications at scale that run on desktop computers, mobile platforms, and AR/VR headsets.

[ arenaxr.org ] [ ISMAR21 ] [ Hybrid Experiences ] [ VR Chat ] [ Physical-Virtual Video ] [ ISMAR23 ] [ VR24 ]

Lightweight Virtualization and Orchestration at the Edge

A paradigm shift in how we design distributed computing systems is almost inevitable. With advanced compute, networking, sensing, and actuation, edge devices are increasingly capable. At the same time, there is a desire to expand some of the cloud infrastructure to the edge. We have been exploring how bytecode virtualization technologies like WebAssembly can be used to modernize embedded system design and deployment workflows.

[ GitHub ]

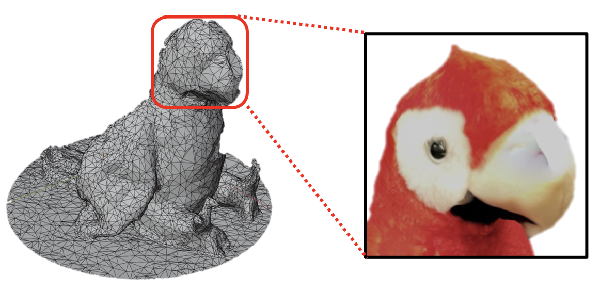

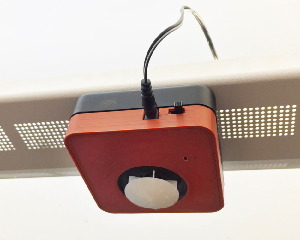

Volumetric Scene Capture and Streaming

We are working on real-time volumetric capture, streaming, and display for use in XR environments.

[ VR24 ]

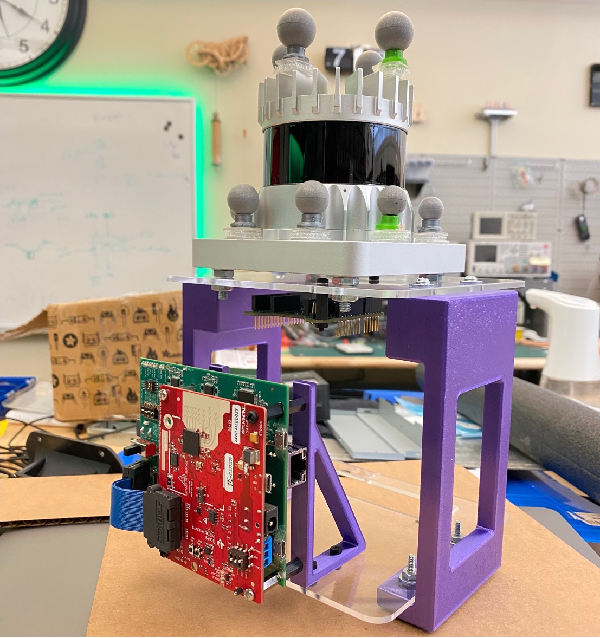

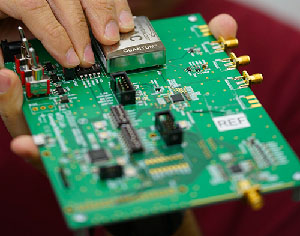

High Resolution mmWave Sensing

We have a number of projects exploring how machine learning can be used to upsample single-chip mmWave radar sensing. We started off with displacement sensing using retroreflector tags and are now exploring point cloud and 3D geometry generation.

[ MobiCom21 ] [ ICRA23 ] [ IPSN23 ] [ RadarHD ] [ HeadCount Teaser ]

Instant-on Camera-based Mobile Localization

Public spaces like concert stadiums and sporting arenas are ideal venues for AR content delivery to crowds of mobile phone users. Unfortunately, these environments tend to be some of the most challenging in terms of lighting and dynamic staging for vision-based relocalization. We have been working on a number of systems with the goal of accessible instant-on 6-DOF mobile phone localization.

[ VR24 ] [ IPSN20 ] [ ISMAR21 ] [ FlashTalk ] [ FlashDemo ]

mmWave Tire Wear Sensing

Tire wear is a leading cause of automobile accidents globally. Beyond safety, tire wear affects performance and is an important metric for deciding when to replace tires, one of the biggest maintenance expenses of the global trucking industry. This project presents Osprey, the first on-automobile mmWave sensing system that can measure tire wear accurately and continuously and is robust to road debris. Osprey’s key innovation is to leverage existing, high-volume automobile mmWave radar, place it in the tire well of automobiles, and observe reflections of the radar’s signal from the tire surface and grooves to measure tire wear, even in the presence of debris.

[ MobiSys20 ]

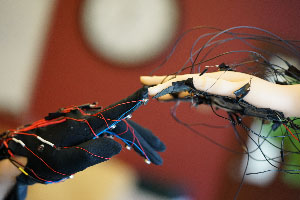

Mixed Reality

Current state-of-the-art AR, MR and VR systems show tremendous promise in applications ranging from entertainment and telerobotics, to pervasive computing and telepresence. Unfortunately, existing systems remain largely decoupled from the physical world, interactivity remains cumbersome and imprecise for end users, and participants lack a realistic representation of themselves. High-fidelity 3D acquisition and processing of physical environments, objects, and human performances are still bound to computation-intensive workflows, and live streaming is only possible with dedicated high-throughput network infrastructure as well as professional capture studios. We are working to bridge this gap by developing the infrastructure that makes virtual objects both persistent across space and time and accessible to multiple users at once.

[ CONIX ] [ Multi-User AR ] [ Relocalization ]

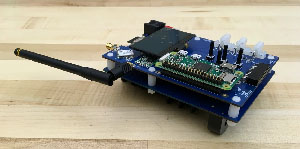

Low Power Wide-Area Networking

OpenChirp is a management framework on top of LoRaWAN that provides data context, storage, visualization, and access control over the web. LoRaWAN is an emerging Low-Power Wide-Area Networking (LP-WAN) protocol designed to support battery-operated devices that want to send just a few bytes of data but over long distances. Devices typically transmit 1-2km in urban areas and up to 15km in open space. OpenChirp is currently powering a LoRaWAN Network that started at Carnegie Mellon University but has spread to the City of Pittsburgh and beyond.

[ OpenChirp.io ] [ MobiSys20 ] [ IPSN18 ]

Infrastructure-free Localization for First Responders

In this project we are developing a rapidly deployable infrastructure-free localization system to track firefighters inside of a structure such as a building. Our goal is to provide fire safety chiefs who are responsible for team accountability a live feed on a tablet or computer outside of the facility that can show the position of each firefighter within. Given the hostile nature of burning structures and the time criticality of missions, this requires that a system can track firefighters without any pre-installed internal and limited external infrastructure, and without assuming knowledge of the structure’s layout. For a system to be practically adopted at scale, it also needs to be low-cost and extremely simple to configure and deploy.

[ IPSN22 ]

Previous Projects (no longer active)

GridBallast: Open Source Smart Water Heater (2020)

This is an ARPA-E funded collaboration between CMU, NRECA, Sparkmeter and EATON to develop an open-source next generation electric water heater and plug load controller. Each device will learn the load profile of the local grid through frequency sensing and learn user consumption patterns over time which can be used to shift peak loads. We are developing an open hardware platform that uses the CTA-2045 standard to communicate with smart grid-enabled devices. The term “GridBallast” refers to the idea that appliances in a home can act like a storage system.

[ GridBallast Website | CMU Hardware ]

Active Acoustic Sensing (2016)

In this project we are designing a platform for low-power real-time sensing of the shape and number of occupants in indoor spaces. The system transmits a wide-band ultrasonic signal into a room and then processes the superposition of the reflections recorded by a microphone. The system has two modes of operation, one for presence detection and one for estimating the number of occupants in a region. Presence detection uses the difference between multiple successive transmissions, together with a set of general classifiers that make a binary decision about whether the room contains occupants.

[ BuildSys 2016 ]

Pulsar: Nano Second Wireless Time Synchronization (2017)

Pulsar is a wireless time transfer platform that can achieve clock synchronization to better than five nanoseconds between indoor or GPS-denied devices. Nanosecond-level clock synchronization is a missing capability for many real-time applications like next-generation wireless systems that leverage spatial multiplexing to improve channel capacity and provide services like time-of-flight localization. With fine-grained synchronization, both clock stability and propagation delays introduce significant sources of error. Pulsar leverages a stable clock source derived from a Chip-Scale Atomic Clock (CSAC) along with an Ultra-WideBand (UWB) radio able to perform sub-nanosecond packet time-stamping to estimate and correct for clock offsets.

[ RTAS 2017 ]
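
As a rough illustration of what "estimating a clock offset" from packet timestamps involves, the sketch below uses the textbook two-way timestamp exchange. This is not Pulsar's actual protocol, which is not described on this page; the function name and the four timestamps are hypothetical, and the math assumes the propagation delay is the same in both directions.

```python
def two_way_offset(t1, t2, t3, t4):
    """Estimate clock offset and one-way delay from a two-way exchange.

    t1: request sent     (read on clock A)
    t2: request received (read on clock B)
    t3: reply sent       (read on clock B)
    t4: reply received   (read on clock A)
    Assumes a symmetric propagation delay (an assumption of this sketch).
    """
    offset = ((t2 - t1) + (t3 - t4)) / 2.0  # clock B minus clock A
    delay = ((t4 - t1) - (t3 - t2)) / 2.0   # one-way propagation delay
    return offset, delay


# Example in nanoseconds: B runs 5 ns ahead of A, one-way delay is 10 ns.
offset_ns, delay_ns = two_way_offset(0.0, 15.0, 20.0, 25.0)
```

Any asymmetry between the two directions shows up directly as offset error, which is one reason precise packet time-stamping matters at the nanosecond scale.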

ALPS: Indoor Ranging for Mobile Devices (2018)

ALPS is an indoor ultrasonic ranging technique that can be used to localize modern mobile devices like smartphones and tablets. The method uses a communication scheme in the audio bandwidth just above the human hearing frequency range where mobile devices are still sensitive.

[ Website | SenSys 2012 | SenSys 2015 | Demo Video ]

Rural Microgrids (2016)

We are currently working on using wireless communication to help make electricity accessible in underserved markets. Our hardware is currently being used in a wirelessly managed microgrid deployment in rural Les Anglais, Haiti. The system consists of a cloud-based monitoring and control service, a local embedded gateway infrastructure and a mesh network of wireless smart meters deployed at 52 buildings. Each smart meter device has an 802.15.4 radio that enables remote monitoring and control of electrical service. The meters communicate over a scalable multi-hop TDMA network back to a central gateway that manages load within the system. The gateway also provides an 802.11 interface for an on-site operator and a cellular modem connection to a cloud-backend that manages and stores billing and usage data. The cloud backend allows occupants in each home to pre-pay for electricity at a particular peak power limit using a text messaging service. The system activates each meter within seconds and locally enforces power limits with provisioning for theft detection. We believe that this fine-grained micro-payment model can enable sustainable power in otherwise unfeasible areas.

[ Website | IPSN 2014 | ICCPS 2016 | Sparkmeter ]

Visual Light Landmarks (2014)

The omnipresence of indoor lighting makes it an ideal vehicle for pervasive communication with mobile devices. In this paper, we present a communication scheme that enables interior ambient LED lighting systems to send data to mobile devices using either cameras or light sensors. By exploiting rolling shutter camera sensors that are common on tablets, laptops and smartphones, it is possible to detect high-frequency changes in light intensity reflected off of surfaces and in direct line-of-sight of the camera. We present a demodulation approach that allows smartphones to accurately detect frequencies as high as 8kHz with 0.2kHz channel separation. In order to avoid humanly perceivable flicker in the lighting, our system operates at frequencies above 2kHz and compensates for the non-ideal frequency response of standard LED drivers by adjusting the light’s duty-cycle.

[ Website | IPSN 2014 | Slides]
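
To make the idea of frequency channels with 0.2kHz separation concrete, here is a minimal sketch that picks the strongest channel in a block of light-intensity samples using the Goertzel algorithm. It is not the demodulator from the paper, which works on rolling-shutter camera images; the 32 kHz sample rate, the block length, and the function names are assumptions made for illustration.

```python
import math


def goertzel_power(samples, sample_rate, freq):
    """Signal power near `freq` (Hz), computed with the Goertzel recurrence."""
    n = len(samples)
    k = round(n * freq / sample_rate)       # nearest DFT bin
    coeff = 2.0 * math.cos(2.0 * math.pi * k / n)
    s_prev = s_prev2 = 0.0
    for x in samples:
        s = x + coeff * s_prev - s_prev2
        s_prev2, s_prev = s_prev, s
    return s_prev2 ** 2 + s_prev ** 2 - coeff * s_prev * s_prev2


def detect_channel(samples, sample_rate, channels):
    """Return the candidate frequency with the highest power."""
    return max(channels, key=lambda f: goertzel_power(samples, sample_rate, f))


# Hypothetical setup: 32 kHz sampling, channels from 2 kHz to 8 kHz, 200 Hz apart.
RATE = 32000
CHANNELS = list(range(2000, 8001, 200))
tone = [math.sin(2 * math.pi * 3000 * i / RATE) for i in range(1600)]
detected = detect_channel(tone, RATE, CHANNELS)
```

With 1600 samples at 32 kHz the bin width is 20 Hz, so every 200 Hz channel falls on an exact bin and neighboring channels do not leak into each other in this idealized case.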

Sensor Andrew: A Living Laboratory for Infrastructure Sensing (2012)

Sensor Andrew is a multi-disciplinary campus-wide scalable sensor network that is designed to host a wide range of sensing and low-power applications. The goals of Sensor Andrew are to support ubiquitous large-scale monitoring and control of infrastructure in a way that is extensible, easy to use, and provides security while maintaining privacy. Target applications currently being developed on the Sensor Andrew network include infrastructure monitoring, first-responder support, quality of life for the disabled, water distribution systems monitoring and optimization, building power monitoring and control, social networking, and biometric sensors for campus security. Sensor Andrew is now powered by the Mortar.io platform.

[Project Website][Tech Report][IBM Journal][IPSN Demo]

Drone-RK: A Real-Time Distributed UAV Platform (2012)

Drone-RK is an open-source real-time distributed UAV development infrastructure that focuses on the software infrastructure required for self-contained autonomous UAV application development. Drone-RK currently runs on the Parrot AR.Drone hardware platform. Drone-RK adds Resource Kernel (RK) real-time scheduling extensions to the standard Linux kernel: tasks specify their resource demands, and the operating system provides timely, guaranteed and controlled access to system resources (CPU, network, sensors and actuators). The Drone-RK development platform provides APIs for local sensing, control and processing as well as various demonstration applications. In order to support rich autonomous behaviors, the platform provides hooks to incorporate additional hardware components (GPS, digital compasses, ultrasonic ranging, etc.).

[Project Website] [ICCPS 2012]

Distributed time-series data management (2013)

As sensor networks gain traction and begin to scale, we will be increasingly faced with challenges associated with managing large-scale time-series data. We present a cloud-to-edge partitioned architecture called Respawn that is capable of serving large amounts of time-series data from a continuously updating datastore with access latencies low enough to support interactive real-time visualization. Respawn targets sensing systems where resource-constrained edge node devices may only have limited or intermittent network connections linking them to a cloud-backend. The cloud-backend provides aggregate storage and transparent dispatching of data queries to edge node devices. Data is downsampled as it enters the system, creating a multi-resolution representation capable of low-latency range-based queries. Lower-resolution aggregate data is automatically migrated from edge nodes to the cloud-backend both for improved consistency and caching. In order to further mask latency from users, edge nodes automatically identify and migrate blocks of data that contain statistically interesting features.

[Website | RTSS 2013]
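
The multi-resolution idea behind Respawn can be sketched in a few lines. The example below is an assumption-laden illustration, not Respawn's implementation: it downsamples by plain averaging, keeps every level in memory, and uses hypothetical names (`build_pyramid`, `range_query`). A range query is served from the finest level that fits within a point budget, which is what keeps wide queries cheap.

```python
from statistics import mean


def build_pyramid(samples, factor=2, levels=3):
    """Level 0 is the raw data; each later level averages `factor` points."""
    pyramid = [list(samples)]
    for _ in range(levels):
        prev = pyramid[-1]
        if len(prev) < factor:
            break  # not enough points to form another level
        pyramid.append([mean(prev[i:i + factor])
                        for i in range(0, len(prev) - factor + 1, factor)])
    return pyramid


def range_query(pyramid, start, end, max_points, factor=2):
    """Serve raw-index range [start, end) from the finest level that fits."""
    for level, data in enumerate(pyramid):
        step = factor ** level
        chunk = data[start // step: -(-end // step)]  # floor start, ceil end
        if len(chunk) <= max_points:
            return level, chunk
    return level, chunk  # coarsest level, even if it exceeds max_points


pyramid = build_pyramid([1, 2, 3, 4, 5, 6, 7, 8])
level, points = range_query(pyramid, 0, 8, max_points=4)
```

A narrow query such as `range_query(pyramid, 2, 6, max_points=4)` is answered from the raw level, while the full-range query above falls back to the first averaged level.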

WaterBot (2010)

WaterBot is a real-time conductivity sensor and data logger, designed to enable inexpensive and convenient monitoring of well and watershed systems with high temporal frequency and high spatial density. Conductivity of water is an indirect measure of Total Dissolved Solids (TDS), which is a frontline indicator of water quality. Collecting baseline data and setting up mobile sensor networks in areas impacted by water source contamination is essential for citizen science and civic monitoring projects.

[Project Website]

CMUcam4 (2012)

The CMUcam4 is a fully programmable embedded computer vision sensor. In the latest version of the CMUcam, we simplified the design by removing the FIFO memory buffer. The main processor is the Parallax P8X32A (Propeller Chip) connected to an OmniVision 9665 CMOS camera sensor module. For more information, please see the CMUcam4 wiki.

[Project Website]

Nano-RK: A Real-Time Wireless Sensor Network Operating System (2008)

Nano-RK is a reservation-based real-time operating system (RTOS) with multi-hop networking support for use in wireless sensor networks. Nano-RK currently runs on the FireFly Sensor Networking Platform as well as the MicaZ motes. It includes a light-weight embedded resource kernel (RK) with rich functionality and timing support using less than 2KB of RAM and 16KB of ROM. Nano-RK supports fixed-priority preemptive multitasking for ensuring that task deadlines are met, along with support for CPU, network, sensor and actuator reservations. Tasks can specify their resource demands and the operating system provides timely, guaranteed and controlled access to CPU cycles and network packets. Together these resources form virtual energy reservations that allow the OS to enforce system and task level energy budgets.

[Project Website] [RTSS 05 | Slides ] [RHS | Slides]
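
The notion of a reservation in Nano-RK can be illustrated with a small budget-per-period accounting sketch. This is a simplified model written in Python for readability, not Nano-RK's C API; the class name, method names, and tick units are all hypothetical. A task may run only while it has budget left in the current period, and the budget is replenished when a new period starts.

```python
class CpuReservation:
    """Allow at most `budget` ticks of execution in every `period` ticks."""

    def __init__(self, budget, period):
        self.budget = budget
        self.period = period
        self.used = 0
        self.period_start = 0

    def may_run(self, now):
        """Replenish at period boundaries, then report whether budget remains."""
        elapsed = now - self.period_start
        if elapsed >= self.period:
            # Skip ahead by whole periods so boundaries stay aligned.
            self.period_start += (elapsed // self.period) * self.period
            self.used = 0
        return self.used < self.budget

    def charge(self, ticks):
        """Account for `ticks` of execution against the current period."""
        self.used += ticks


# A task reserved 2 ticks out of every 10.
res = CpuReservation(budget=2, period=10)
ran_first = res.may_run(0)       # budget available at the start
res.charge(2)                    # task consumes its whole budget
blocked = not res.may_run(1)     # no budget left in this period
ran_again = res.may_run(10)      # replenished in the next period
```

The same accounting applied to network packets or sensor reads, rather than CPU ticks, gives the flavor of how per-resource limits could add up to an energy budget.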

Low-power Clock Synchronization using AC Power Lines (2013)

In this work, we present a novel low-power hardware module for achieving global clock synchronization by tuning to the magnetic field radiating from existing AC power lines. This signal can be used as a global clock source for battery-operated sensor nodes to eliminate drift between nodes over time even when they are not passing messages. With this scheme, all receivers are frequency-locked to one another, but there is typically a phase offset between them. Since these phase offsets tend to be constant, a higher-level compensation protocol can be used to globally synchronize a sensor network.

[Project Website] [SenSys 09 | Slides]

Building Power Monitoring and Control (2010)

The Sensor Andrew Gateway Agent (SAGA) Sensor Network Dashboard is a web interface that provides users with a way to view, actuate and manage a subnet of wireless sensor nodes. The front-end is designed to run locally on a router acting as a gateway between the sensor network and the Internet. Information collected from sensor nodes is stored locally for quick retrieval even if outside network connectivity is lost or unavailable. When network connectivity is available, data can be pushed through the Sensor Andrew infrastructure to external agents, which can, for example, archive historical events or perform higher-level processing. This also enables secure bi-directional communication to gateways behind firewalls or with dynamic IP addresses like those found in broadband-connected homes. The web interface allows devices to be easily configured with aliases and grouped together based on type. Individual sensors and groups of sensors can be plotted to show relative comparisons of sensor values. For example, plotting a group of energy metering devices will show a comparison of which devices are consuming what fraction of the energy.

[Project Website] [IPSN 09 Demo]

Rate-Harmonized Scheduling for Saving Energy (2008)

Many modern power-aware processors and microcontrollers have built-in support for active, idle and sleep operating modes. In sleep mode, substantially more energy savings can be obtained but it requires a significant amount of time to switch into and out of that mode. Hence, a significant amount of energy is lost due to idle gaps between executing tasks that are shorter than the required time for the processor to enter the sleep mode. We present a technique called Rate-Harmonized Scheduling that naturally clusters task execution such that processor idle times are lumped together. We next introduce the Energy-Saving Rate-Harmonized Scheduler, which guarantees that every idle duration on the processor can be used to put the processor into sleep mode. This property can even be used to eliminate the idle power mode in processors, while the scheduler remains predictable and analyzable and saves more energy.

[RTSS 08 | Slides]

FireFly Real-Time Sensor Networking Platform (2010)

The FireFly Sensor Networking Platform is a low-cost low-power hardware platform. In order to better support real-time applications, the system is built around maintaining global time synchronization. The main FireFly board uses an Atmel ATmega1281 8-bit micro-controller with 8KB of RAM and 128KB of ROM along with TI's (formerly Chipcon) CC2420 IEEE 802.15.4 standard-compliant radio transceiver for communication. The maximum packet size supported by 802.15.4 is 128 bytes and the maximum raw data rate is 250Kbps.

[Project Website][SECON 06][RTSJ 06]

CMUcam 3 (2007)

The goal of the CMUcam project is to provide simple vision capabilities to small embedded systems in the form of an intelligent sensor. The CMUcam3 extends upon this idea by providing a flexible and easy to use open source development environment that complements a low cost hardware platform. The CMUcam3 is an ARM7TDMI based fully programmable embedded computer vision sensor. The main processor is the NXP LPC2106 connected to an Omnivision CMOS camera sensor module. Custom C code can be developed for the CMUcam3 using a port of the GNU toolchain along with a set of open source libraries and example programs. Executables can be flashed onto the board using the serial port with no external downloading hardware required.

[Project Website][Technical Report]

FireFly Mosaic: Visual-Enabled Wireless Sensor Networks (2007)

FireFly Mosaic is a vision-enabled sensor network comprised of smart cameras that can perform distributed image processing tasks. In this work we demonstrate a system that can monitor people’s daily activities in the home. The system automatically combines information extracted from multiple overlapping cameras to recognize various regions in the house where particular activities frequently occur. Examples of such activities include washing dishes, preparing food, eating dinner, sitting on the couch or sleeping. Once these daily activity clusters are defined, the system builds a model which tracks the duration and transition frequency between various tasks. This information can be monitored over time to detect changes in behavior, or it can be used to give contextual clues to other in-home systems. For example, a fall detection system could use this type of context information to lower its probability of triggering if the user is sleeping or has a visitor in a nearby location.

[RTSS 07 | Slides]

Micro-climate Enhanced RF Localization (2007)

We propose micro-climate sensing as an effective means of enhancing conventional RF-based localization. Our system targets people-tracking applications in dynamic indoor environments, such as nursing homes, hospitals and office spaces, that require simple deployment and where conventional RF tracking may suffer from time-varying signal attenuation or dropped packets. RF-based localization approaches suffer as the environment changes over time. To help mitigate these effects we use time-synchronized windows of sensor samples to associate a mobile node with its nearby beacon nodes. In assisted living environments, sensor networks likely already have basic sensors to collect contextual information about users and to monitor the environment. We propose using light, humidity, temperature and audio data samples over a short window of time to model the micro-climate of a beacon node. Using micro-climate matching in conjunction with RF signal strength decreases the worst-case localization error by a factor of more than 3 (from 25m to 8m) while making the system more resilient to environment changes. Micro-climate data helps ensure at least room-level location tracking even in buildings like hospitals with many rooms in close proximity.

[SMC 07 | Slides]
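
The micro-climate matching step can be pictured as a nearest-neighbor lookup over sensor readings taken in the same time window. The sketch below is only an illustration under stated assumptions: readings are already averaged over the window and normalized to comparable ranges, the candidate beacons are the ones the mobile node can hear over RF, and the function name, feature names, and values are hypothetical rather than taken from the paper.

```python
import math


def closest_beacon(mobile, beacons):
    """Return the beacon whose micro-climate is nearest to the mobile node's.

    mobile:  dict mapping feature name -> normalized reading
    beacons: dict mapping beacon name -> dict of the same features
    """
    def distance(a, b):
        # Euclidean distance over the mobile node's features.
        return math.sqrt(sum((a[k] - b[k]) ** 2 for k in a))

    return min(beacons, key=lambda name: distance(mobile, beacons[name]))


# Hypothetical normalized readings from one time-synchronized window.
mobile_node = {"light": 0.8, "humidity": 0.4, "temperature": 0.5, "audio": 0.2}
rf_candidates = {
    "room_101": {"light": 0.9, "humidity": 0.4, "temperature": 0.5, "audio": 0.3},
    "hallway":  {"light": 0.1, "humidity": 0.5, "temperature": 0.4, "audio": 0.6},
}
best = closest_beacon(mobile_node, rf_candidates)
```

Restricting the candidates to beacons heard over RF is one simple way to combine the two signals; the weighting actually used in the published system may differ.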

RT-Link and Voice Streaming for Coal Mine Safety (2006)

RT-Link is a TDMA-based multi-hop wireless sensor networking MAC protocol. It utilizes tight time synchronization from either in-band message passing or an out-of-band AM carrier current beacon. RT-Link was deployed as part of a coal mine safety system that could track miner locations as well as stream voice in the event of a disaster. The sensor network functions with a low duty-cycle during normal operation and is able to switch over to a high-rate mode for voice communication during emergencies. RT-Link supports on-demand rate control and can switch the network's operation based on the current application's throughput and end-to-end delay requirements.

[Project Website][RTSS 06 | Slides][SECON 06 | Slides]
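
A TDMA schedule like RT-Link's can be modeled as a repeating frame of fixed-length slots, with each node transmitting only in the slots assigned to it. The sketch below is a generic illustration, not RT-Link's actual frame format: the slot length, the 32-slot frame, and the function names are assumptions. It shows how assigning more slots per frame trades a higher duty cycle for more throughput, as in the switch from normal operation to voice streaming.

```python
def next_tx_time(now, slot_len, slots_per_frame, my_slots):
    """Start time of the node's next transmit slot at or after `now`.

    Times are integer ticks measured from the synchronized frame epoch.
    Returns None if the node owns no slots.
    """
    frame_len = slot_len * slots_per_frame
    frame_start = (now // frame_len) * frame_len
    # Check the current frame first, then the following one.
    for base in (frame_start, frame_start + frame_len):
        for slot in sorted(my_slots):
            start = base + slot * slot_len
            if start >= now:
                return start
    return None


def duty_cycle(slots_per_frame, my_slots):
    """Fraction of each frame in which the node's radio must be active."""
    return len(my_slots) / slots_per_frame


# Hypothetical schedules: one slot per frame normally, four when streaming.
low_rate = [3]
high_rate = [3, 11, 19, 27]
first = next_tx_time(0, slot_len=5, slots_per_frame=32, my_slots=low_rate)
after_miss = next_tx_time(16, slot_len=5, slots_per_frame=32, my_slots=low_rate)
```

Because every node derives slot boundaries from the shared time base, nodes can sleep between their slots without missing traffic.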

eWatch (2006)

eWatch is a wearable computing system that can be worn on your wrist. It has several sensors that gather information about the user and the environment. It can attract the user's attention with visual and/or tactile notifications. eWatch supports wireless Bluetooth communication which allows it to interface with a computer, PDA, or cellular phone. The wrist watch form factor allows open exposure to the environment making it ideal for ascertaining user state information. This also means that eWatch is instantly viewable and always accessible to the user.

[Project Website][BSN 06]

CMUcam 2 (2005)

The CMUcam2 includes all of the functionality of the original CMUcam in an enhanced form and a lot of new functionality. It uses an updated processor that has more RAM, more ROM, and more I/O pins. This allowed us to add functionality like frame differencing, edge detection, and color histogramming. The CMUcam2 also uses a frame buffer which allows for faster processing, multiple operations per frame, more control over communication speeds and better looking frame dumps.

[Project Website][ECV 05]

CMUcam 1 (2001)

An embedded color vision system. Basic image processing on a microcontroller. Check out some really old videos of me at NASA Ames.

[Project Website][IROS 02][Video]