FPGA Architecture for Computing

Field Programmable Gate Arrays (FPGAs) have been undergoing a dramatic transformation from a logic technology to a computing technology. Notwithstanding the rapid progress we have seen, much of FPGAs’ computing potential remains untapped. FPGAs are “programmable” devices like processors, but we have not seen FPGAs deployed, even for compute applications, with dynamism anywhere near that of processors. Research is needed to fully develop and exploit FPGAs’ programmability in a new usage modality divorced from FPGAs’ ASIC legacy. In this project, we ask the question: what should a future FPGA, intentionally architected as a computing substrate, look like? We are working towards answers that bring forth FPGAs' under-tapped capability in programmability and dynamism through investigations in new methodologies, usage modalities, and architecture.

Funding for this work has been provided, in part, by the National Science Foundation (CCF-1012851), Intel ISRA, and SRC/JUMP. We thank Altera, Xilinx and Bluespec for their donation of tools and hardware.

Students

- Eric Chung (PhD Thesis, 2011)

- Joseph Melber

- Marie Nguyen (PhD Thesis, 2020)

- Shashank Obla

- Michael Papamichael (PhD Thesis, 2015)

- Siddharth Sahay

- Gabriel Weisz (PhD Thesis, 2015)

- Yu Wang (PhD Thesis, 2018)

- Zhipeng Zhao

Efforts

Programmable and Dynamic Computing Deployment

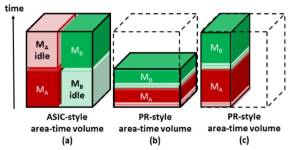

We are developing a dynamic partial reconfiguration (DPR) runtime environment to expand the dynamism and shareability of an FPGA in the domain of realtime, interactive computer vision applications. DPR allows one region of the FPGA logic fabric to be reprogrammed without interfering with the operations of the remaining regions. Thus, one could partition and manage an FPGA logic fabric as multiple DPR partitions that can be independently reconfigured at runtime. Making use of DPR, our runtime environment lets multiple vision pipelines dynamically share an FPGA logic fabric both spatially and temporally. This enables more efficient provisioning and utilization of FPGA logic resources in a realtime and interactive setting when the applications and their requirements cannot be anticipated ahead of time. We have demonstrated a time-shared usage mode where the FPGA logic fabric is time-multiplexed by multiple vision processing pipelines within the timescale of individual video frames (tens of milliseconds) (Nguyen, FPL 2018). We have conducted benchmarking to quantify the benefits of dynamic FPGA mapping with DPR over static FPGA mapping, in terms of area/device cost, power and energy (Nguyen, FPL 2019). While our work so far has focused on realtime vision processing, we are working to extend this dynamic approach to other computation applications and patterns.
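As a rough illustration of the time-shared usage mode, the sketch below checks whether a set of pipelines can take turns in one DPR partition within a single video frame. It is a back-of-the-envelope model only: the `Pipeline` class, the pipeline names, and all timing values are hypothetical and are not measurements from this project.

```python
from dataclasses import dataclass


@dataclass
class Pipeline:
    name: str
    exec_ms: float      # hypothetical per-frame processing time once loaded
    reconfig_ms: float  # hypothetical time to load this pipeline's partial bitstream


def fits_in_frame(pipelines, frame_ms):
    """Return (fits, busy_ms) when the pipelines take turns in one DPR partition.

    Each pipeline is charged its reconfiguration time plus its execution time,
    once per frame, with no overlap between the two.
    """
    busy_ms = sum(p.reconfig_ms + p.exec_ms for p in pipelines)
    return busy_ms <= frame_ms, busy_ms


frame_ms = 1000.0 / 30  # frame period at 30 frames per second
pipelines = [
    Pipeline("edge_detect", exec_ms=8.0, reconfig_ms=3.0),
    Pipeline("optical_flow", exec_ms=12.0, reconfig_ms=4.0),
]
fits, busy_ms = fits_in_frame(pipelines, frame_ms)
print(f"busy {busy_ms:.1f} ms of {frame_ms:.1f} ms, fits: {fits}")
```

The model makes the main constraint visible: reconfiguration time is pure overhead, so time-sharing within a frame only works when the partial bitstream loads are short relative to the frame period.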

To fully exploit FPGAs' power of programmability and dynamism, FPGA designers need to adopt a new design perspective distinct from traditional ASIC thinking. DPR can be applied as a design optimization technique unique to FPGAs. FPGA designers have traditionally shared a similar design methodology with ASIC designers. Most notably, at design time, FPGA designers commit to a fixed allocation of logic resources to modules in a design. At runtime, some of the occupied resources could be left under-utilized due to hard-to-avoid sources of inefficiencies (e.g., operation dependencies, unbalanced pipelines). With DPR as an optimization, FPGA resources can be re-allocated over time to reduce under-utilization with better area-time scheduling. (Nguyen, FPL 2020)
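The area-time trade-off can be made concrete with a toy calculation. The sketch below assumes two dependent modules that can never be busy at the same time, and compares a static allocation (both modules resident for the whole run) against a single DPR region that is reloaded between them. The function names, module areas, run times, and reconfiguration time are all made-up illustrative values, not results from the cited paper.

```python
def static_area_time(modules):
    """Static mapping: every module occupies its own area for the whole run.

    `modules` is a list of (area, run_time) pairs that execute one after another.
    """
    area = sum(a for a, _ in modules)
    time = sum(t for _, t in modules)
    return area, time


def dpr_area_time(modules, reconfig_time):
    """DPR mapping: one region sized for the largest module, reloaded per module."""
    area = max(a for a, _ in modules)
    time = sum(t + reconfig_time for _, t in modules)
    return area, time


# Hypothetical design: (area in arbitrary logic units, run time in ms).
modules = [(40, 5.0), (60, 5.0)]

s_area, s_time = static_area_time(modules)
d_area, d_time = dpr_area_time(modules, reconfig_time=1.0)
print("static area-time:", s_area * s_time)
print("DPR    area-time:", d_area * d_time)
```

With these numbers the DPR mapping runs longer because of the reconfiguration overhead but occupies less area, giving a smaller area-time product. If the reconfiguration time grows large relative to the run times, the comparison flips, which is why this is a scheduling decision and not a universal win.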

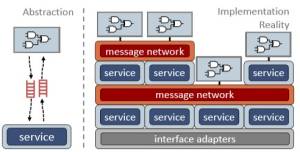

Service Oriented Memory Architecture

Prevailing memory abstractions and infrastructures that support FPGA application development continue to rely on the classic notions of loading and storing to memory address locations. Why should we limit our thinking to such a low-level, explicit paradigm when developing computing applications on an FPGA? Just like in software development, FPGA application developers should be supported by high-level abstractions that encapsulate in-memory data structures and meaningful operations on them. Under a Service-Oriented Memory Architecture, compute accelerator logic interacts with abstracted “memory objects” using a rich set of commands. The implementation complexities of the memory objects and operations, as well as the bare-metal-level memory interface, are hidden from the compute accelerator developers. The support for these memory objects and operations, realized as soft-logic datapath modules, can be specialized to an application domain and can be provided in a reusable and extensible library. We are currently working on a proof-of-concept prototype environment and application demonstrators. (Melber, FPL 2020)
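To illustrate the difference in abstraction level, here is a small software analogy. `RawMemory` stands for the classic load/store interface, and `LinkedListService` stands for a "memory object" that accepts a higher-level command. Both classes, the `traverse` command, and the two-word node layout are hypothetical names chosen for this sketch; they are not the interface of the actual prototype.

```python
class RawMemory:
    """Baseline abstraction: a flat, word-addressed memory with load/store only."""

    def __init__(self, size):
        self.words = [0] * size

    def load(self, addr):
        return self.words[addr]

    def store(self, addr, value):
        self.words[addr] = value


class LinkedListService:
    """Hypothetical memory object that hides node layout and pointer chasing."""

    NULL = 0  # address 0 is reserved as the null pointer in this sketch

    def __init__(self, mem, head_addr):
        self._mem = mem
        self._head = head_addr

    def traverse(self):
        """Command: stream every payload in list order."""
        addr = self._head
        while addr != self.NULL:
            yield self._mem.load(addr)       # word 0 of a node: payload
            addr = self._mem.load(addr + 1)  # word 1 of a node: next pointer


# Build a three-node list scattered through memory: 10 -> 20 -> 30.
mem = RawMemory(16)
for addr, payload, nxt in [(2, 10, 6), (6, 20, 4), (4, 30, 0)]:
    mem.store(addr, payload)
    mem.store(addr + 1, nxt)

payloads = list(LinkedListService(mem, head_addr=2).traverse())
print(payloads)
```

The consumer of `traverse` sees only a stream of payloads. Where the nodes live, how pointers are encoded, and how many memory requests are issued are all decisions left to the service, which is the encapsulation the paragraph above argues for.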

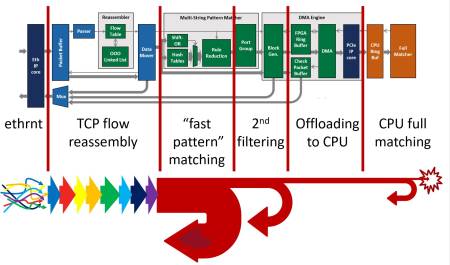

Network Function Acceleration

We begin our investigation by studying FPGA acceleration of intrusion detection systems (IDS) and intrusion prevention systems (IPS). (Zhao, OSDI 2020) Today’s state-of-the-art software IDS can process about 1 Gbps per high-end CPU core. This is an untenable starting point for an IPS that can operate inline with today’s 100 Gbps links and respond in time to stop a malicious packet from propagating. In Project Pigasus, we are accelerating a 10K-rule IPS to 100 Gbps on one Stratix-10 MX FPGA. The effort required a very different design mindset from the software approach. We have been able to achieve 100 Gbps by making effective use of the Stratix-10 MX FPGA’s fast on-chip SRAM. For TCP reassembly, Pigasus uses dynamic allocation to compactly store packets in on-chip SRAM. Pigasus implements multi-string “fast pattern” matching using small SRAM hash tables combined with another light-weight filter. In the current system, only the (not-yet-accelerated) full matching stage is offloaded to CPU cores in a stateless fashion.
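The general idea of filtering with a small hash table before doing a full match can be sketched in a few lines. This is a generic software illustration of hash-based pre-filtering, not the Pigasus datapath: the prefix length, table size, use of CRC32 as the hash, and the example patterns are all assumptions made for this sketch, and the inner verification loop merely stands in for a full matching stage.

```python
import zlib

PREFIX_LEN = 4   # assumed window length hashed by the filter
TABLE_BITS = 12  # assumed table size: 4096 one-bit entries


def slot(window: bytes) -> int:
    """Map a PREFIX_LEN-byte window to a table index."""
    return zlib.crc32(window) & ((1 << TABLE_BITS) - 1)


def build_table(patterns):
    """Mark the slot of every pattern's prefix (patterns must be >= PREFIX_LEN bytes)."""
    table = [False] * (1 << TABLE_BITS)
    for p in patterns:
        table[slot(p[:PREFIX_LEN])] = True
    return table


def scan(payload: bytes, patterns, table):
    """Return the sorted list of patterns that occur in the payload."""
    hits = set()
    for i in range(len(payload) - PREFIX_LEN + 1):
        # Cheap filter: most positions miss in the table and are skipped.
        if table[slot(payload[i:i + PREFIX_LEN])]:
            # Full check runs only on the few candidate positions.
            for p in patterns:
                if payload.startswith(p, i):
                    hits.add(p)
    return sorted(hits)


patterns = [b"/etc/passwd", b"cmd.exe"]
table = build_table(patterns)
print(scan(b"GET /etc/passwd HTTP/1.1", patterns, table))
print(scan(b"GET /index.html HTTP/1.1", patterns, table))
```

Because a table hit is always verified, hash collisions can only cost extra work and never produce a wrong match. The filter's value is that the expensive check runs on a small fraction of byte positions.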

We are looking to generalize the framework and components from the Pigasus IPS effort to other in-network compute opportunities. Future work is also to create a high-level domain-specific NF programming framework for use by networking experts who are not RTL experts. This is joint work with Justine Sherry and Vyas Sekar.

Complete open-source code is available on GitHub if you are interested in reproducing or reusing Pigasus IPs.

(See also my earlier work on a kind of programmable FPGA "Smart" NIC.)

CoRAM (Classic)

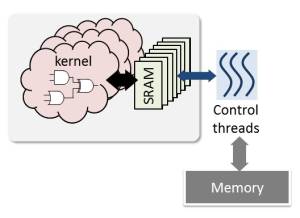

Our investigation into FPGA architecture for computing began in 2009 with the question: how should data-intensive FPGA compute kernels view and interact with external memory data? In response, we developed the original CoRAM FPGA computing abstraction. The goal of the CoRAM abstraction is to present the application developer with (1) a virtualized appearance of the FPGA’s resources (i.e., reconfigurable logic, external memory interfaces, and on-chip SRAMs) to hide low-level, non-portable platform-specific details, and (2) standardized, easy-to-use high-level interfaces for controlling data movements between the memory interfaces and the in-fabric computation kernels. Besides simplifying application development, the virtualization and standardization of the CoRAM abstraction also make possible portable and scalable application development.

- Our initial concept is based on factoring out the concerns for data orchestration from the compute kernels. The in-fabric computation kernels interact only with the simple on-chip SRAM blocks for data input and output (Chung, FPGA'2011). Separately, a set of control threads, expressed in a multithreaded C-like language, manage (1) the data movements between the off-chip DRAM and on-chip SRAMs, and also (2) the invocations of the kernels over time. The CoRAM compiler automatically infers and synthesizes from the control threads both the required data transfer paths and the state-machine controllers in support of the computation kernels.

- We later introduced a soft-logic CoRAM abstraction layer with further elevated kernel and control thread application-level interfaces that directly support the high-level semantics of commonly-used in-memory data structure types (e.g., streams, arrays, linked lists, and trees) (Weisz, FPL'2015).
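The separation of concerns described above can be mimicked in software. In the sketch below, the kernel only ever touches a small SRAM buffer, while a control thread moves data between DRAM and SRAM and decides when the kernel runs. This is a plain Python simulation written for illustration: `control_thread`, `double_kernel`, and the memory layout are hypothetical and do not reproduce the actual CoRAM control-thread language or its compiler, which synthesizes hardware from the control threads.

```python
def double_kernel(sram, count):
    """Compute kernel: sees only the on-chip SRAM buffer, never DRAM addresses."""
    for i in range(count):
        sram[i] *= 2


def control_thread(dram, sram_words, kernel, n, out_base):
    """Stream dram[0:n] through the kernel one SRAM-sized tile at a time.

    Results are written back to dram[out_base : out_base + n].
    """
    sram = [0] * sram_words
    for base in range(0, n, sram_words):
        count = min(sram_words, n - base)
        sram[:count] = dram[base:base + count]                        # DRAM -> SRAM
        kernel(sram, count)                                           # invoke the kernel
        dram[out_base + base:out_base + base + count] = sram[:count]  # SRAM -> DRAM


# Ten input words followed by ten words reserved for the output.
dram = list(range(10)) + [0] * 10
control_thread(dram, sram_words=4, kernel=double_kernel, n=10, out_base=10)
print(dram[10:])
```

Changing the tile size or the DRAM layout only requires edits to the control thread. The kernel stays untouched, which is the portability argument behind the abstraction.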

Publications

- Zhipeng Zhao, Hugo Sadok, Nirav Atre, James C. Hoe, Vyas Sekar, and Justine Sherry. Achieving 100Gbps Intrusion Prevention on a Single Server. Proc. USENIX Symposium on Operating Systems Design and Implementation (OSDI), November 2020. (pdf)

- Marie Nguyen, Nathan Serafin, and James C. Hoe. Partial Reconfiguration for Design Optimization. Proc. IEEE International Conference on Field-programmable Logic and Applications (FPL), September 2020. (pdf)

- Joe Melber and James C. Hoe. A Service-Oriented Memory Architecture for FPGA Computing. Proc. IEEE International Conference on Field-programmable Logic and Applications (FPL), September 2020. (pdf)

- Marie Nguyen, Robert Tamburo, Srinivasa Narasimhan, and James C. Hoe. Quantifying the Benefits of Dynamic Partial Reconfiguration for Embedded Vision Applications. Proc. International Conference on Field-programmable Logic and Applications (FPL), September 2019. (pdf)

- Yu Wang, James C. Hoe, and Eriko Nurvitadhi. Processor Assisted Worklist Scheduling for FPGA Accelerated Graph Processing on a Shared-Memory Platform. Proc. Symposium on Field-Programmable Custom Computing Machines (FCCM), May 2019. (pdf)

- Yu Wang. Accelerating Graph Processing on a Shared Memory FPGA System. PhD Thesis, December 2018. (pdf)

- Marie Nguyen and James C. Hoe. Time-Shared Execution of Realtime Computer Vision Pipelines by Dynamic Partial Reconfiguration. Proc. International Conference on Field-programmable Logic and Applications (FPL), September 2018. (pdf, 8-page version at arXiv:1805.10431)

- Marie Nguyen and James C. Hoe. Amorphous Dynamic Partial Reconfiguration with Flexible Boundaries to Remove Fragmentation. October 2017. (arXiv:1710.08270)

- Zhipeng Zhao and James C. Hoe. Using Vivado-HLS for Structural Design: a NoC Case Study. Unpublished Tech Report, February 2017. (arXiv:1710.10290, source)

- James C. Hoe. Technical Perspective: FPGA Compute Acceleration Is First about Energy Efficiency. Communications of the ACM, November 2016. (acm)

- Gabriel Weisz, Joseph Melber, Yu Wang, Kermin Fleming, Eriko Nurvitadhi and James C. Hoe. A Study of Pointer-Chasing Performance on Shared-Memory Processor-FPGA Systems. Proc. ACM International Symposium on Field-Programmable Gate Arrays (FPGA), February 2016. (pdf)

- Michael K. Papamichael and James C. Hoe. The CONNECT Network-on-Chip Generator. IEEE Computer, December 2015. (ieee)

- Gabriel Weisz and James C. Hoe. CoRAM++: Supporting Data-Structure-Specific Memory Interfaces for FPGA Computing. Proc. International Conference on Field-programmable Logic and Applications (FPL), September 2015. (pdf)

- Michael Papamichael. Pandora: Facilitating IP Development for Hardware Specialization. PhD Thesis, August 2015. (pdf)

- Gabriel Weisz. CoRAM++: Supporting Data-Structure-Specific Memory Interfaces in FPGA Computing. PhD Thesis, August 2015. (pdf)

- Michael K. Papamichael, Peter Milder and James C. Hoe. Nautilus: Fast Automated IP Design Space Search Using Guided Genetic Algorithms. Proc. Design Automation Conference (DAC), June 2015. (pdf)

- Michael K. Papamichael, Cagla Cakir, Chen Sun, Chia-Hsin Owen Chen, James C. Hoe, Ken Mai, L. Peh, Vladimir Stojanovic. DELPHI: A Framework for RTL-Based Architecture Design Evaluation Using DSENT Models. Proc. International Symposium on Performance Analysis of Systems and Software (ISPASS), March 2015. (pdf)

- Eriko Nurvitadhi, Gabriel Weisz, Yu Wang, Skand Hurkat, Marie Nguyen, James C. Hoe, José F. Martinez, and Carlos Guestrin. GraphGen: An FPGA Framework for Vertex-Centric Graph Computation. Proc. Symposium on Field-Programmable Custom Computing Machines (FCCM), May 2014. (pdf)

- Shinya Takamaeda-Yamazaki, Kenji Kise and James C. Hoe. PyCoRAM: Yet Another Implementation of CoRAM Memory Architecture for Modern FPGA-based Computing. Third Workshop on the Intersections of Computer Architecture and Reconfigurable Logic (CARL), December 2013. (pdf)

- Eric Chung and Michael Papamichael. ShrinkWrap: Compiler-Enabled Optimization and Customization of Soft Memory Interconnects. Proc. Symposium on Field-Programmable Custom Computing Machines (FCCM), April 2013. (pdf)

- Gabriel Weisz and James C. Hoe. C-To-CoRAM: Compiling Perfect Loop Nests to the Portable CoRAM Abstraction. Proc. ACM International Symposium on Field-Programmable Gate Arrays (FPGA), February 2013. (pdf)

- Berkin Akın, Peter A. Milder, Franz Franchetti and James C. Hoe. Memory Bandwidth Efficient Two-Dimensional Fast Fourier Transform Algorithm and Implementation for Large Problem Sizes. Proc. International Symposium on Field-Programmable Custom Computing Machines (FCCM), April 2012. (pdf)

- Michael Papamichael and James C. Hoe. CONNECT: Re-Examining Conventional Wisdom for Designing NoCs in the Context of FPGAs. Proc. ACM International Symposium on Field-Programmable Gate Arrays (FPGA), February 2012. (pdf)

- Eric S. Chung, Michael K. Papamichael, Gabriel Weisz, James C. Hoe, and Ken Mai. Prototype and Evaluation of the CoRAM Memory Architecture for FPGA-Based Computing. Proc. ACM International Symposium on Field-Programmable Gate Arrays (FPGA), February 2012. (pdf)

- Eric S. Chung. CoRAM: An In-Fabric Memory Architecture for FPGA-Based Computing. PhD Thesis, August 2011. (pdf)

- Eric S. Chung, James C. Hoe, and Kenneth Mai. CoRAM: An In-Fabric Memory Architecture for FPGA-based Computing. Proc. ACM International Symposium on Field-Programmable Gate Arrays (FPGA), pp. 97-106, February 2011. (pdf)

- Eric S. Chung, Peter A. Milder, James C. Hoe, and Kenneth Mai. Single-chip Heterogeneous Computing: Does the future include Custom Logic, FPGAs, and GPUs? Proc. International Symposium on Microarchitecture (MICRO), pp. 53-64, December 2010. (pdf)

- James C. Hoe. High-level Programming for Reconfigurable Computing. 1996. (pdf) The write-up for MIT CS “Area Exam”.