Hyunggic !

Multi-Sensor Fusion for Moving Object Detection and Tracking

This project detects and tracks multiple moving objects, such as pedestrians, bicyclists, and vehicles, using multiple heterogeneous sensors mounted on a moving vehicle. I have been designing and implementing a new tracking system for autonomous vehicles based on Boss's tracking system. The new system extends our previous tracker, which was optimized for the Urban Challenge competition, into a practical system for real urban environments. In addition, it works entirely with production-grade LIDAR and radar sensors, without a high-definition 3D LIDAR sensor (i.e., Velodyne HDL-64). This gives the vehicle a very neat appearance but also poses many challenges for its perception system, especially for moving object tracking. To overcome them, we fuse object detection results from vision into the tracking system, so that we get reliable object classification and height estimation in addition to fast position and velocity estimation for moving objects.

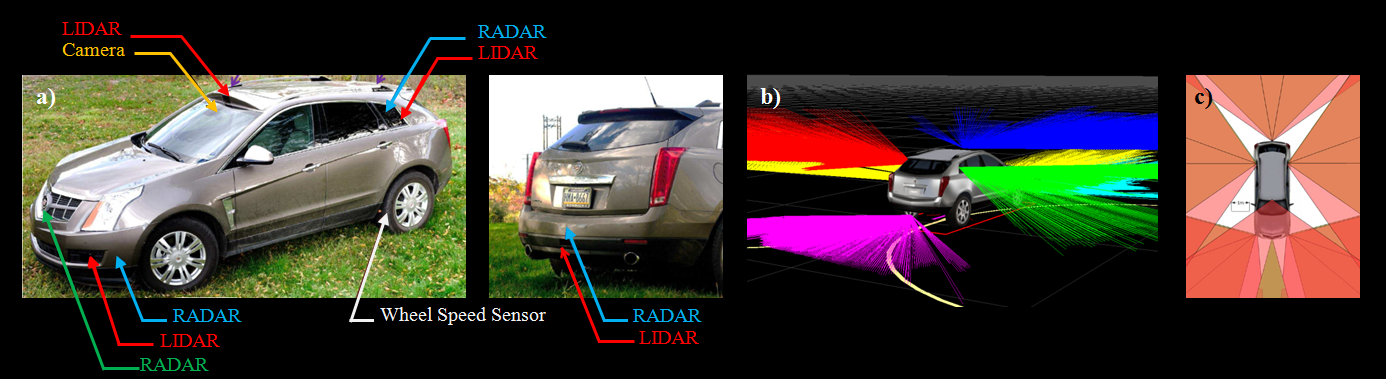
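The page does not describe the tracker's internals, so the following is only a minimal sketch of the general idea stated above: range sensors (LIDAR, radar) drive fast position and velocity estimates, while vision detections contribute a class label and a height estimate. The constant-velocity Kalman filter, the confidence-sum class vote, the running-mean height, and all names (`AxisKalman`, `Track`, `update_range_sensor`, `update_vision`) are assumptions made for illustration, not the system's actual design.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class AxisKalman:
    """Constant-velocity Kalman filter for one axis (hypothetical helper)."""
    pos: float = 0.0
    vel: float = 0.0
    # Entries of the symmetric 2x2 covariance [[p_pp, p_pv], [p_pv, p_vv]].
    p_pp: float = 10.0
    p_pv: float = 0.0
    p_vv: float = 10.0

    def predict(self, dt: float, accel_noise: float = 1.0) -> None:
        # State transition: x = F x with F = [[1, dt], [0, 1]].
        self.pos += self.vel * dt
        # Covariance: P = F P F^T + Q (white-acceleration process noise).
        q = accel_noise ** 2
        p_pp = self.p_pp + 2 * dt * self.p_pv + dt * dt * self.p_vv
        p_pv = self.p_pv + dt * self.p_vv
        self.p_pp = p_pp + q * dt ** 4 / 4
        self.p_pv = p_pv + q * dt ** 3 / 2
        self.p_vv = self.p_vv + q * dt ** 2

    def update(self, measured_pos: float, meas_var: float) -> None:
        # Position-only measurement: H = [1, 0].
        innovation = measured_pos - self.pos
        s = self.p_pp + meas_var
        k_pos, k_vel = self.p_pp / s, self.p_pv / s
        self.pos += k_pos * innovation
        self.vel += k_vel * innovation
        # Covariance: P = (I - K H) P.
        p_pp = (1 - k_pos) * self.p_pp
        p_pv = (1 - k_pos) * self.p_pv
        p_vv = self.p_vv - k_vel * self.p_pv
        self.p_pp, self.p_pv, self.p_vv = p_pp, p_pv, p_vv


@dataclass
class Track:
    """One tracked object fed by range sensors and vision (hypothetical)."""
    x: AxisKalman = field(default_factory=AxisKalman)
    y: AxisKalman = field(default_factory=AxisKalman)
    class_scores: Dict[str, float] = field(default_factory=dict)
    height_m: Optional[float] = None
    _height_count: int = 0

    def predict(self, dt: float) -> None:
        self.x.predict(dt)
        self.y.predict(dt)

    def update_range_sensor(self, px: float, py: float, meas_var: float) -> None:
        # LIDAR/radar: position measurement refines position and velocity.
        self.x.update(px, meas_var)
        self.y.update(py, meas_var)

    def update_vision(self, label: str, confidence: float, height_m: float) -> None:
        # Vision: accumulate class evidence and keep a running mean of height.
        self.class_scores[label] = self.class_scores.get(label, 0.0) + confidence
        self._height_count += 1
        if self.height_m is None:
            self.height_m = height_m
        else:
            self.height_m += (height_m - self.height_m) / self._height_count

    def best_class(self) -> str:
        if not self.class_scores:
            return "unknown"
        return max(self.class_scores, key=self.class_scores.get)


if __name__ == "__main__":
    track = Track()
    # Synthetic object moving at 2 m/s along x, observed at 10 Hz.
    for step in range(1, 51):
        track.predict(0.1)
        track.update_range_sensor(2.0 * 0.1 * step, 0.0, meas_var=0.04)
    track.update_vision("pedestrian", confidence=0.9, height_m=1.7)
    print(track.x.vel, track.best_class(), track.height_m)
```

In this sketch the two sensor paths are deliberately decoupled: range updates can arrive at a high rate without waiting for vision, and a track without any vision evidence simply reports `"unknown"` and no height.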

This tracking system has been evaluated on our self-driving vehicle, the autonomous Cadillac SRX4. The research platform was developed by our research group at CMU (a.k.a. ADCRL, the Autonomous Driving Collaboration Research Lab). Our team did amazing engineering work to build a beautiful self-driving vehicle, as shown below. Some important aspects of this effort were introduced in the following paper:

Towards a Viable Autonomous Driving Research Platform

Junqing Wei, Jarrod M. Snider, Junsung Kim, John M. Dolan, Raj Rajkumar and Bakhtiar Litkouhi

IEEE Intelligent Vehicles Symposium, 2013

[Sensor Configuration of Our Autonomous SRX]

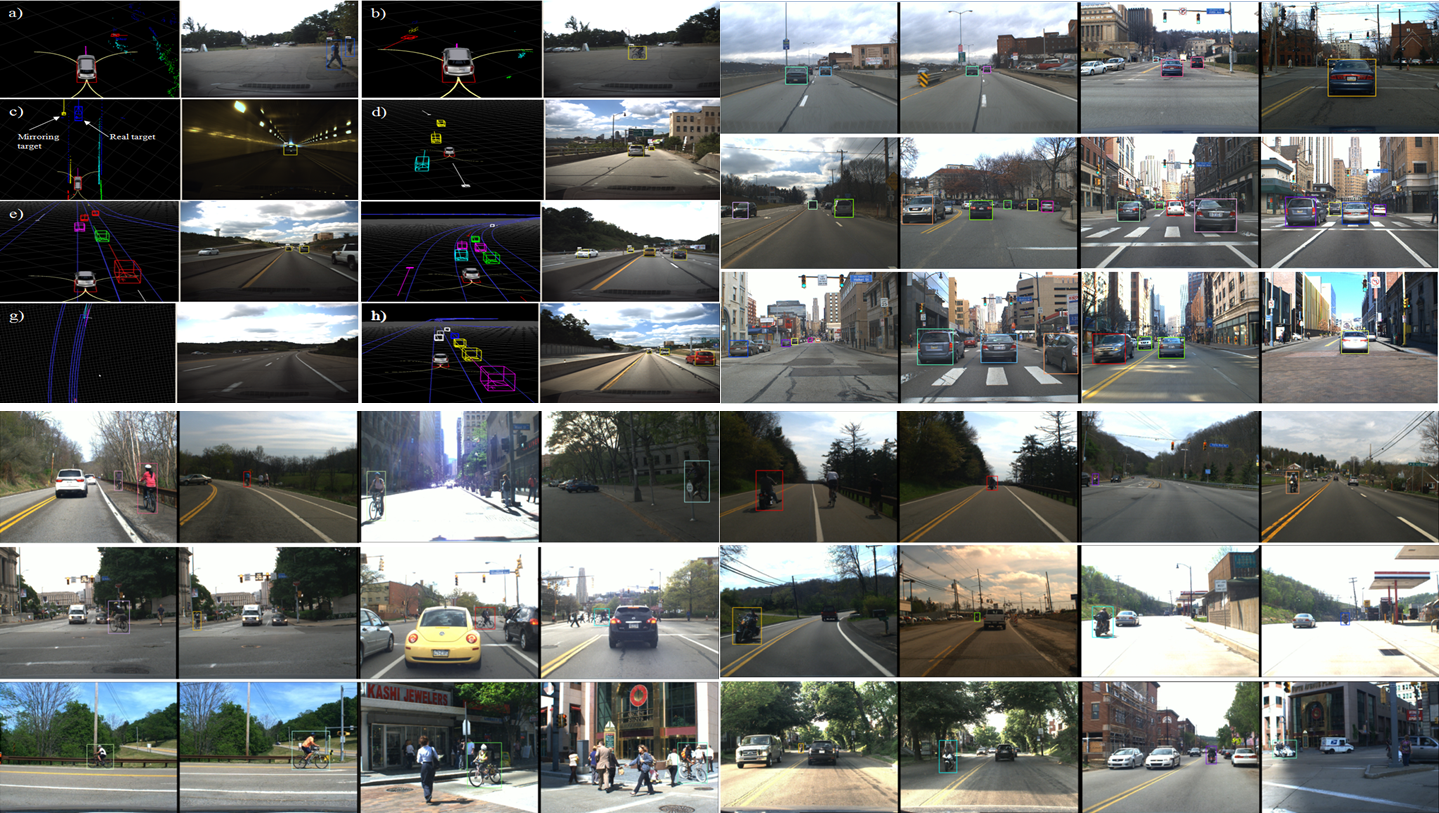

[Cool Pictures of Our Autonomous SRX]

The following papers and systems show the history of its development.

H. Cho, Wende Zhang, Dan Levi, and B.V.K. Vijaya Kumar

H. Cho, Young-Woo Seo, B.V.K. Vijaya Kumar, and Raj (Ragunathan) Rajkumar, IEEE International Conference on Robotics and Automation, Hong Kong, China, 2014 (accepted)

[Selected Videos]

1. Moving object tracking result on CMU-to-Airport Dataset: Part3 Tracking with RNDF

2. Moving object tracking result on CMU-to-Airport Dataset: Part2 Tracking without RNDF

3. Moving object tracking result on CMU-to-Airport Dataset: Part1 Tracking without RNDF

4. Moving object tracking result on CMU-to-Airport Dataset: Lidar and Radar-based tracking performance without vision fusion, mirroring target issue

Click here for a full list of publications