Learning Class-Specific Affinities for Image Labelling

People:

Dhruv Batra, Rahul Sukthankar, Tsuhan Chen

Keywords:

Learning non-parametric class-specific affinities, Parameter learning in CRFs, Joint segmentation and recognition of object classes, Supervised segmentation

Abstract

Spectral clustering and eigenvector-based methods have become increasingly popular in segmentation and recognition. Although the choice of the pairwise similarity metric (or affinities) greatly influences the quality of the results, this choice is typically specified outside the learning framework. In this paper, we present an algorithm to learn class-specific similarity functions. Mapping our problem in a Conditional Random Fields (CRF) framework enables us to pose the task of learning affinities as parameter learning in undirected graphical models. There are two significant advances over previous work. First, we learn the affinity between a pair of data-points as a function of a pairwise feature and (in contrast with previous approaches) the classes to which these two data-points were mapped, allowing us to work with a richer class of affinities. Second, our formulation provides a principled probabilistic interpretation for learning all of the parameters that define these affinities. Using ground truth segmentations and labellings for training, we learn the parameters with the greatest discriminative power (in an MLE sense) on the training data. We demonstrate the power of this learning algorithm in the setting of joint segmentation and recognition of object classes. Specifically, even with very simple appearance features, the proposed method achieves state-of-the-art performance on standard datasets.
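To make the idea of a class-specific affinity concrete, here is a minimal Python sketch of how a labelling could be scored when each edge weight depends on both the pair of visual words and the pair of classes. It is an illustration under stated assumptions, not the authors' implementation: the function names, the dictionary layout of `edge_w`, the toy weights, and the brute-force search are all hypothetical, and the parameter learning (the MLE step) described in the abstract is not shown.

```python
from itertools import product


def labelling_score(words, edges, labels, node_w, edge_w):
    """Unnormalized log-score of a labelling in a toy pairwise model.

    words[i]  : visual word index of region i
    edges     : list of (i, j) pairs of neighbouring regions
    labels[i] : class index assigned to region i
    node_w[w][c]               : weight for word w under class c
    edge_w[(w1, w2, c1, c2)]   : class-specific affinity (hypothetical layout;
                                 missing keys count as 0, and no symmetry
                                 between (w1, w2) and (w2, w1) is assumed)
    """
    score = sum(node_w[words[i]][labels[i]] for i in range(len(words)))
    for i, j in edges:
        key = (words[i], words[j], labels[i], labels[j])
        score += edge_w.get(key, 0.0)
    return score


def best_labelling(words, edges, n_classes, node_w, edge_w):
    """Exhaustive search over labellings; only viable for tiny graphs."""
    return max(
        product(range(n_classes), repeat=len(words)),
        key=lambda lab: labelling_score(words, edges, lab, node_w, edge_w),
    )


# Toy setup (all numbers invented for illustration).
# Visual words: 0 = "blue", 1 = "white". Classes: 0 = sign, 1 = sky, 2 = building.
words = [0, 1]
edges = [(0, 1)]
node_w = {0: [0.0, 1.0, 0.0],   # "blue" mildly favours sky
          1: [0.0, 0.0, 1.0]}   # "white" mildly favours building
edge_w = {(0, 1, 0, 0): 1.5,    # blue-white affinity when both regions are sign
          (0, 1, 1, 2): 0.5}    # blue-white affinity for sky next to building

example_score = labelling_score(words, edges, (1, 2), node_w, edge_w)
example_best = best_labelling(words, edges, 3, node_w, edge_w)
```

The point of the sketch is only that the same pair of visual words ("blue", "white") receives a different weight depending on the classes assigned to the two regions, which a single class-independent affinity cannot express.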

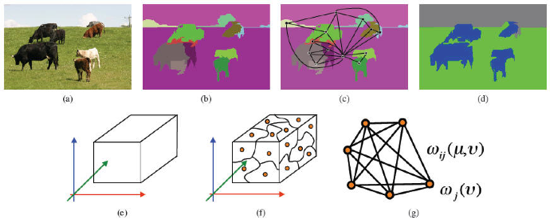

Figure 1: The need for class-specific affinities: the affinity between “blue” and “white” regions should be high for images in the top row (those colors occur together in street signs); the same affinities should be low for images in the bottom row to enable white buildings and birds to be segmented from blue sky.

Figure 2: Overview of our approach: (a) an input image; (b) superpixels extracted from this image; (c) region graph G constructed over those superpixels; (d) optimal labelling of the image; (e) visualization of raw feature space F; (f) visual words extracted in this feature space; (h) shows the complete graph Gf over these visual words, along with weights on nodes and edges. Unlike previous work, we employ class-specific edge weights.

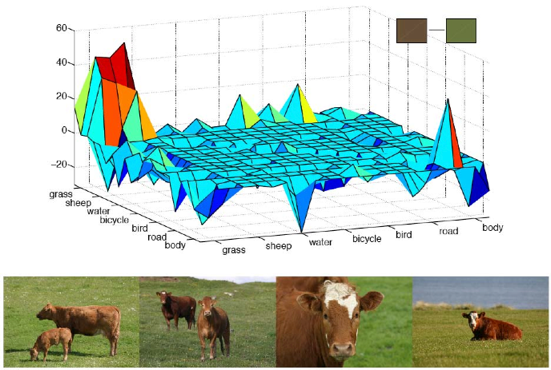

Figure 3: Class-Specific Affinities: plot (top) shows the learnt affinities between a pair of visual words (brown–green) as a function of classes; bottom rows show images containing the pair of classes (cow–grass) under which these visual words had the highest affinity between them.

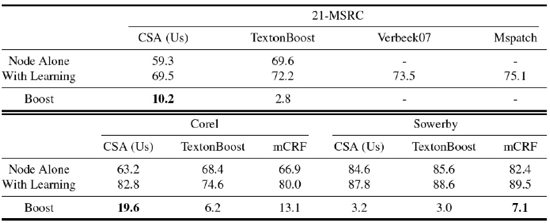

Table 1: Comparison of our method (CSA) with other works; first row holds accuracies achieved by node features alone; second row shows accuracies by using the overall learning framework; and the third row shows the gain.

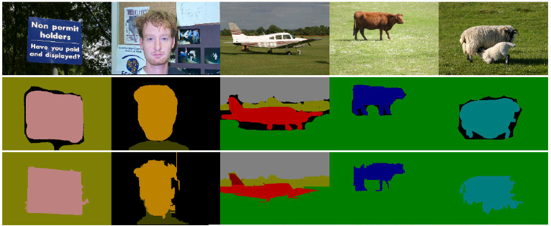

Figure 4: Example results on the MSRC dataset: Top row shows original images; middle row shows ground-truth; and bottom row shows our results.

Publications:

Dhruv Batra, Rahul Sukthankar, and Tsuhan Chen. Learning Class-Specific Affinities for Image Labelling. IEEE Conference on Computer Vision and Pattern Recognition 2008 (CVPR '08).

[ pdf ]

Dhruv Batra, Rahul Sukthankar, and Tsuhan Chen. Learning Class-Specific Affinities for Image Labelling. Carnegie Mellon University Technical Report: AMP08-01.

[ pdf | amp tech reports ]