Assistive Technologies

Eye of the Beholder (text recognition for the visually impaired)

Blind and visually impaired people cannot access essential information presented as written text in their environment (e.g., on restaurant menus, elevators, street signs, door labels, product names and instructions, and expiration dates). Visually impaired people can sometimes sense the presence of such objects, but they cannot read the text written on them.

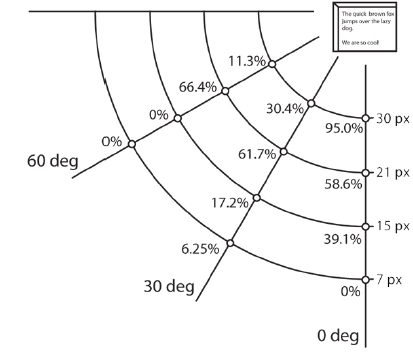

Recognition accuracy

Recognition accuracy

When I gave a demo of Eye of the Beholder at ISWC 2006, many people asked me when the system would become available to the public. Today, this functionality is provided by Google Goggles. Interestingly, in spite of major improvements in smartphone hardware (e.g., better cameras, 13× faster CPUs, built-in GPS receivers), many of the lessons learned from our project still hold. For example, text recognition is done on the server side, the photos must have VGA resolution, and the recognition latency is still about 2–3 s. Goggles achieves recognition accuracy similar to that of our system, but it can put the results in context by using additional sources of information, such as GPS data or image recognition (trained using Google's image database).

Color-Blindness Correction System

Tests performed over the past 50 years on the few people who have one normal eye and one eye affected by color blindness have led to an approximate model for simulating the effects of this genetic deficiency. Based on this model, we found that images can be enhanced for color-blind viewers by applying color-space filtering and processing. Normal images may contain patterns that are completely hidden from an eye with deficient color vision; our technique reveals these patterns with only minimal changes to the image content.
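The page does not specify the simulation model or the filter, so the following is only a rough illustration of the general idea using a well-known technique ("daltonization"): simulate what a dichromat sees, take the difference from the original, and redistribute that lost information into channels the viewer can still distinguish. Everything here is an assumption and not the project's method: the protanopia simulation matrix (the one published by Machado et al., 2009, for full severity), the Fidaner-style error-shift matrix, the use of linear RGB values in [0, 1], and the helper names `simulate`, `daltonize` and `daltonize_image`.

```python
from typing import List, Sequence, Tuple

Pixel = Tuple[float, float, float]
Matrix = Sequence[Sequence[float]]

# ASSUMPTION: linear protanopia simulation matrix (Machado et al., 2009,
# severity 1.0). It expects linear RGB in [0, 1]; it is not the model
# used in the original project.
PROTANOPIA_SIM: Matrix = (
    (0.152286, 1.052583, -0.204868),
    (0.114503, 0.786281, 0.099216),
    (-0.003882, -0.048116, 1.051998),
)

# ASSUMPTION: Fidaner-style error redistribution. The difference a protanope
# cannot perceive is shifted into the green and blue channels.
ERROR_SHIFT: Matrix = (
    (0.0, 0.0, 0.0),
    (0.7, 1.0, 0.0),
    (0.7, 0.0, 1.0),
)


def mat_vec(m: Matrix, v: Pixel) -> Pixel:
    """Multiply a 3x3 matrix by an RGB vector."""
    r, g, b = (sum(m[i][j] * v[j] for j in range(3)) for i in range(3))
    return (r, g, b)


def clamp(x: float) -> float:
    """Keep a channel value inside the displayable range [0, 1]."""
    return min(1.0, max(0.0, x))


def simulate(pixel: Pixel) -> Pixel:
    """Approximate how a protanope perceives the given pixel."""
    return mat_vec(PROTANOPIA_SIM, pixel)


def daltonize(pixel: Pixel) -> Pixel:
    """Add back, in visible channels, the information lost to protanopia."""
    seen = simulate(pixel)
    error = (pixel[0] - seen[0], pixel[1] - seen[1], pixel[2] - seen[2])
    shift = mat_vec(ERROR_SHIFT, error)
    return (clamp(pixel[0] + shift[0]),
            clamp(pixel[1] + shift[1]),
            clamp(pixel[2] + shift[2]))


def daltonize_image(image: List[List[Pixel]]) -> List[List[Pixel]]:
    """Apply the correction to every pixel of a row-major image."""
    return [[daltonize(p) for p in row] for row in image]
```

Because each row of the simulation matrix sums to 1, neutral grays are left essentially unchanged, while a pure red pixel `(1.0, 0.0, 0.0)` becomes roughly `(1.0, 0.479, 0.597)`, which a protanope can tell apart from green. This mirrors the goal stated above of revealing hidden patterns with minimal changes to the rest of the image.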

Publications

- T. Dumitraş, M. Lee, P. Quinones, A. Smailagic, D. Siewiorek and P. Narasimhan, "Eye of the Beholder: Phone-Based Text-Recognition for the Visually-Impaired," Poster Session at ISWC 2006.