Projects

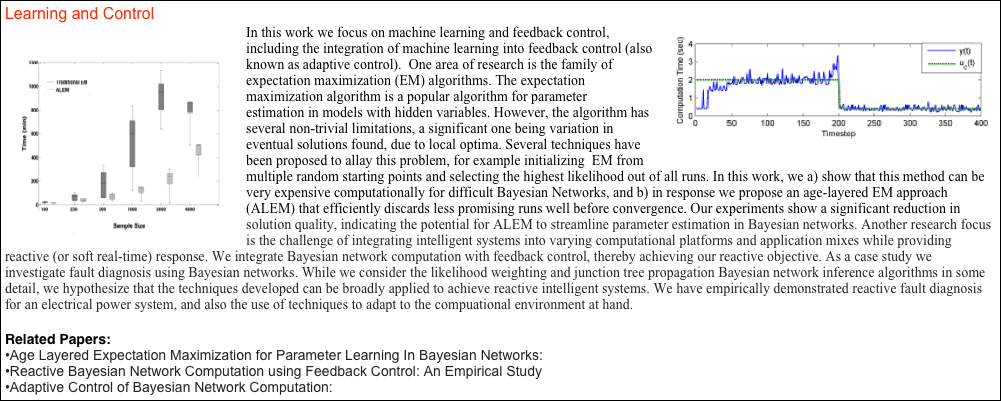

Parallel and Distributed Algorithms for Intelligent Systems

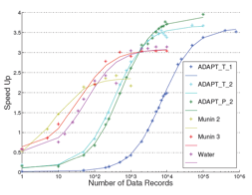

In the area of parallel and distributed algorithms for intelligent systems, we take advantage of opportunities that have emerged due to recent dramatic improvements in parallel and distributed hardware and software. The emergence of graphics processing units (GPUs) as a general computing platform is one important trend; another is the introduction of the MapReduce architecture along with its open-source Hadoop implementation. We have taken advantage of GPUs to perform faster junction tree propagation through parallelism. Compiling Bayesian networks (BNs) to junction trees and performing belief propagation over them is among the most prominent approaches to computing posteriors in BNs. We develop data structures and algorithms that extend existing junction tree techniques, and specifically develop a novel approach to computing each belief propagation message in parallel. We have so far achieved speedups up to approximately 10x; however the speedup depends strongly on the structure of the junction tree. Using MapReduce and Hadoop, we have developed developed Bayesian network parameter learning algorithms and shown how they can dramatically speed up machine learning, both for complete and incompleted data sets. We have also developed a distributed variant of the text mining system Unsupervised Semantic Parsing (USP), which we call Distributed Unsupervised Semantic Parsing (DUSP). DUS improves on USP's ability to handle large text corpora by distributing several of USP’s key algorithmic steps over a cluster of commodity computers. In experiments with DUSP we processed a corpus that was over 13 times larger than the largest corpus we were able to handle using USP.

Related Papers:

-

•Belief Propagation by Message Passing in Junction Trees: Computing Each Message Faster Using GPU Parallelization: http://works.bepress.com/ole_mengshoel/8/

-

•Distributed Unsupervised Semantic Parsing of Large-Scale Document Corpora:

-

•Accelerating Bayesian Network Parameter Learning Using Hadoop and MapReduce:

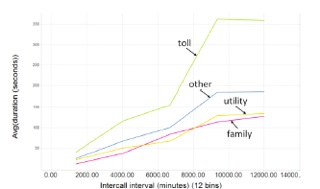

Social Networks: Data Analysis and Machine Learning

Social network analytics, machine learning, and visualizaiton is one of our research areas. We have analyzed a large mobile phone dataset, consisting of millions of call data records (CDRs), provided by one of the major telecom operators. Using this dataset, we have investigated the different social connections or ties that are reflected in such datasets.

Social ties defined by phone calls made between people can be grouped to various affinity networks, such as family members, utility network, friends, coworkers, etc. An understanding of call behaviour within each social affinity network and the ability to infer the type of a social tie from call patterns is invaluable for various industrial purposes. We analyzed the

patterns of 4.3 million phone call data records produced by 360,000 subscribers from two California cities, San Francisco and Modesto, and found features that are highly predictive of the type of social tie, independent of the city. Armed with this knowledge we also have identified promising machine learning classification approaches as well as several potential applications in telecom and security.

Related Papers:

-

•The Impact of Social Affinity on Phone Calling Patterns: Categorizing Social Ties From Call Data Records:

Understanding the Complexity of Bayesian Network Computation

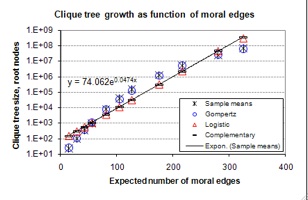

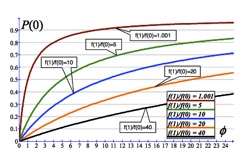

This Project presents and analyzes algorithms that systematically generate random Bayesian networks of varying difficulty levels, with respect to inference using tree clustering. Our generation algorithms, called BPART and MPART, support controlled but random construction of bipartite and multipartite Bayesian networks. The results are relevant to research on efficient Bayesian network inference, such as computing a most probable explanation or belief updating. The results are also relevant to research on machine learning of Bayesian networks, since they support controlled generation of a large number of data sets at a given difficulty level. One of the main approaches to performing computation in Bayesian networks (BNs) is clique tree clustering and propagation we improve this understanding by developing an approach to characterizing clique tree growth as a function of parameters that can be computed in polynomial time from BNs, specifically the ratio of the number of a BN’s non-root nodes to the number of root nodes, and the expected number of moral edges in their moral graphs. Surprisingly, root clique growth is well-approximated by Gompertz growth curves, an S-shaped family of curves that has previously been used to describe growth processes in biology, medicine, and neuroscience. As well in this project we are concerned with the conceptual design of large-scale diagnostic and health management systems that use Bayesian networks.While they are potentially powerful, improperly designed Bayesian networks can result in too high memory requirements or too long inference times We investigate the clique tree clustering approach to Bayesian network inference, where increasing the size and connectivity of a Bayesian network typically also increases clique tree size. We provide both theoretical and experimental results.

Related Papers:

-

•Controlled Generation of Hard and Easy Bayesian Networks: Impact on Maximal Clique Size in Tree Clustering: http://works.bepress.com/ole_mengshoel/9/

-

•Understanding the Scalability of Bayesian Network Interference Using Clique Tree Growth Curves: http://works.bepress.com/ole_mengshoel/5/

-

•Designing Resource-Bounded Reasoners using Bayesian Networks: System Health Monitoring and Diagnosis: http://works.bepress.com/ole_mengshoel/10/

Stochastic Optimization Using Stochastic Local Search

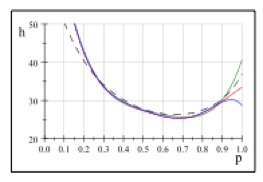

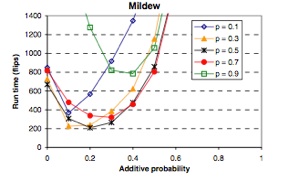

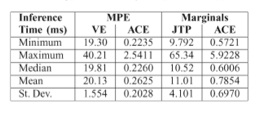

Portfolio methods support the combination of different algorithms and heuristics, including stochastic local search (SLS) heuristics, and have been identified as a promising approach to solve computationally hard problems. While successful in experiments, theoretical foundations and analytical results for portfolio-based SLS heuristics are less developed. Analytically, we introduce a novel Markov chain model tailored to portfolio-based SLS algorithms including SGS, thereby enabling us to analytically form expected hitting time results that explain empirical run time results.For hard computational problems, stochastic local search has proven to be a competitive approach to finding optimal or approximately optimal problem solutions. We introduce a novel approach, based on the Viterbi algorithm, to explanation initialization in BNs. While the Viterbi algorithm works on sequences and trees, our approach works on BNs with arbitrary topologies. We also give a novel formalization of stochastic local search, with focus on initialization and restart, using probability theory and mixture models. Experimentally, we apply our methods to the problem of MPE computation, using a stochastic local search algorithm known as Stochastic Greedy Search. On several BNs from applications, the performance of Stochastic Greedy Search is competitive with clique tree clustering, a state-of-the-art exact algorithm used for MPE computation in BNs. Stochastic local search (SLS) algorithms have recently been proven to be among the best approaches to solving computationally hard problems. SLS algorithms typically have a number of parameters, optimized empirically, that characterize and determine their performance. To complement existing experimental results, we formulate and analyze several Markov chain models of SLS in this article. In particular, we compute expected hitting times and show that they are rational functions for individual problem instances as well as their mixtures.We believe that our results provide an improved theoretical understanding of the role of noise in stochastic local search, thereby providing a foundation for further progress in this area.

Related Papers:

-

•Portfolios in Stochastic Local Search: Efficiently Computing Most Probable Explanations in Bayesian Networks: http://works.bepress.com/ole_mengshoel/3/

-

•Initialization and Restart in Stochastic Local Search: Computing a Most Probable Explanation in Bayesian Networks: http://works.bepress.com/ole_mengshoel/11/

-

•Understanding the Role of Noise in Stochastic Local Search: Analysis and Experiments: http://works.bepress.com/ole_mengshoel/2/

Stochastic Optimization Using Genetic Algorithms

Related Papers:

-

•Generalized Crowding for Genetic Algorithms: http://works.bepress.com/ole_mengshoel/20/

-

•Probabilistic Crowding: Deterministic Crowding with Probabilistic Replacement: http://works.bepress.com/ole_mengshoel/14/

-

•The Crowding Approach to Niching in Genetic Algorithms: http://works.bepress.com/ole_mengshoel/15/

-

•Constraint Handling Using Tournament Selection: Abductive Inference in Partly Deterministic Bayesian Network: http://works.bepress.com/ole_mengshoel/21/

-

•A Novel Mating Approach for Genetic Algorithms:

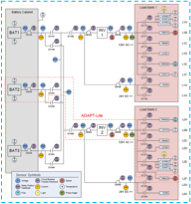

Fault Diagnosis Using Bayesian Networks

Related Papers

-

•Integrating Probabilistic Reasoning and Statistical Quality Control Techniques for fault Diagnosis in Hybrid Domains: http://works.bepress.com/ole_mengshoel/18/

-

•Probabilistic Model-Based Diagnosis: an Electrical Power System Case Study: http://works.bepress.com/ole_mengshoel/4/

-

•A Tutorial on Bayesian Networks for System Health Management: http://works.bepress.com/ole_mengshoel/24/

-

•Diagnosis Faults in Electrical Power Systems of Spacecraft and Aircraft: http://works.bepress.com/ole_mengshoel/1/

-

•Diagnosing Intermittent and Persistent Faults Using Static Bayesian Networks: http://works.bepress.com/ole_mengshoel/7/

-

•Developing Large-Scale Bayesian Networks by Computation: Fault Diagnosis of Electrical Power Systems in Aircraft and Spacecraft: http://works.bepress.com/ole_mengshoel/26/

-

•Methods for Probabilistic Fault Diagnosis: An Electrical Power System Case Study:

-

•Diagnosis and Reconfiguration using Bayesian Networks : An Electrical Power System Case Study: http://works.bepress.com/ole_mengshoel/25/

-

•Using Bayesian Networks for Candidate Generation in Consistency-Based Diagnosis:

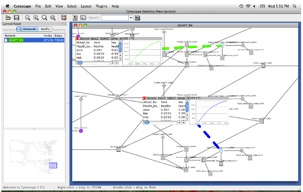

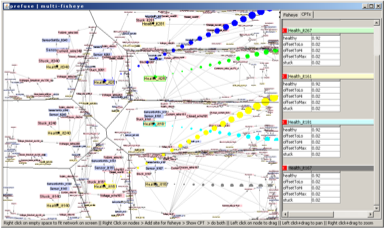

Visual Analytics for Networks

Related Papers:

-

•Visualizing and Understanding Large-Scale Bayesian Networks: http://works.bepress.com/ole_mengshoel/23/

-

•Multi-Fisheye for Interactive Visualization of Large Graphs:

Verification and Validation

Related Papers:

-

•Verification and Validation of System Health Management Models using Parametric Testing: http://works.bepress.com/ole_mengshoel/13/

-

•A Framework for Systematic Benchmarking of Monitoring and Diagnostic Systems: http://works.bepress.com/ole_mengshoel/27/

-

•Who Guards the Guardians?: Toward Verification and Validation of health Management Software:

-

•Advanced Diagnostics and Prognostics Testbed:

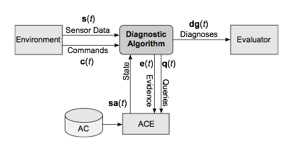

Software and Sensor Health Management

In this project, we focus on the use of Bayesian networks (BNs) to monitor the health of on-board software and sensor systems, and to perform advanced on-board diagnostic reasoning. Advanced compilation techniques are used to obtain a compact SSHM (Software and Sensor Health Management) system with a powerful reasoning engine, which can run in an embedded software environment and is amenable to V&V. We successfully demonstrate our approach using an OSEK-compliant operating system kernel, and discuss in detail several nominal and fault scenarios for a small satellite simulation with a simple bang-bang controller. In this project we use Bayesian networks to 1) monitor the health of the on-board software and sensor system and 2) perform advanced on-board diagnostic reasoning.We focus on the development of reliable and robust health models for combined software and sensor systems, with application to guidance, navigation, and control (GN&C).We propose that iSWHM (Integrated SoftWare Health Management) can increase safety and reliability of high-assurance software systems. iSWHM uses advanced techniques from the area of system health management in order to continuously monitor the behaviour of the software during operation, quickly detect anomalies and perform automatic and reliable root-cause analysis, while not replacing traditional V&V. Information provided by the iSWHM system can be used for automatic mitigation mechanisms (e.g., recovery, dynamic reconfiguration) or presented to a human operator. In this project a probabilistic approach, using Bayesian networks, to diagnosis and sensor validation, and investigate several relevant but slightly different Bayesian network queries.

Related Papers:

-

•Integrated Software and Sensor Health Management for Small Spacecraft: http://works.bepress.com/ole_mengshoel/16/

-

•Bayesian Software Health Management for Aircraft Guidance, Navigation, and Control: http://works.bepress.com/ole_mengshoel/17/

-

•Software Health Management with Bayesian Networks: http://works.bepress.com/ole_mengshoel/19/

-

•Towards Software Health Management with Bayesian Networks: http://works.bepress.com/ole_mengshoel/12/

-

•Sensor Validation Using Bayesian Networks: http://works.bepress.com/ole_mengshoel/22/

-

•Software Health Management: A Short Review of Challenges and Existing Techniques: