Abstract

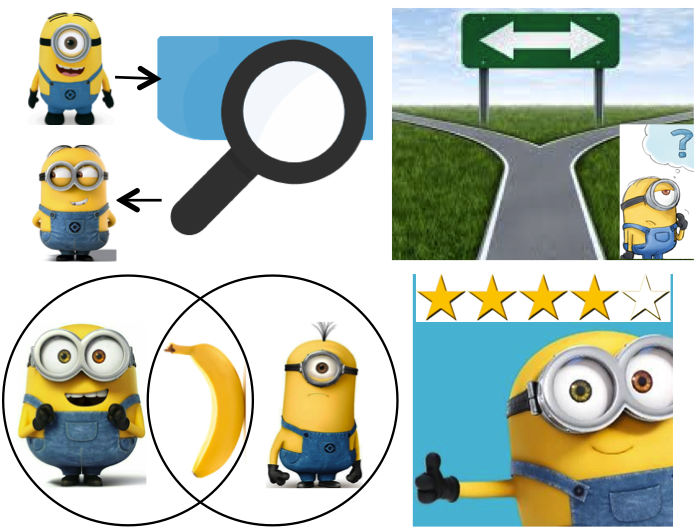

Modern On-Line Data Intensive (OLDI) applications have evolved from monolithic systems into collections of numerous distributed microservices that interact via Remote Procedure Calls (RPCs). Microservices face single-digit millisecond RPC latency goals (implying sub-ms medians), far tighter than the ≥ 100 ms latency targets of their monolithic ancestors. Sub-ms-scale OS/network overheads that were once insignificant for such monoliths can now dominate in the sub-ms-scale microservice regime. It is therefore vital to characterize the influence of OS- and network-based effects on microservices. Unfortunately, widely used academic data center benchmark suites are unsuitable for this characterization because they (1) use monolithic rather than microservice architectures, and (2) largely have request service times ≥ 100 ms. In this paper, we investigate how OS and network overheads impact microservice median and tail latency by developing a complete suite of microservices, called µSuite, that we use to facilitate our study. µSuite comprises four OLDI services composed of microservices: image similarity search, protocol routing for key-value stores, set algebra on posting lists for document search, and recommender systems. Our characterization reveals that the relationship between optimal OS/network parameters and service load is complex. Our primary finding is that non-optimal OS scheduler decisions can degrade microservice tail latency by up to ∼87%.